kwimage package

Subpackages

- kwimage.algo package

- kwimage.cli package

- kwimage.gis package

- kwimage.structs package

- Submodules

- kwimage.structs._generic module

- kwimage.structs.boxes module

- kwimage.structs.coords module

- kwimage.structs.detections module

- kwimage.structs.heatmap module

- kwimage.structs.mask module

- kwimage.structs.points module

- kwimage.structs.polygon module

- kwimage.structs.segmentation module

- kwimage.structs.single_box module

- Module contents

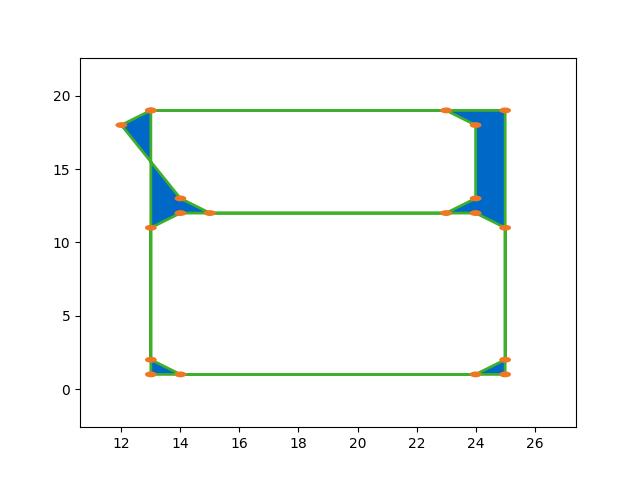

Box: Box.format, Box.data, Box.random(), Box.from_slice(), Box.from_shapely(), Box.from_dsize(), Box.from_data(), Box.coerce(), Box.dsize, Box.translate(), Box.warp(), Box.scale(), Box.clip(), Box.quantize(), Box.copy(), Box.round(), Box.pad(), Box.resize(), Box.intersection(), Box.union_hull(), Box.to_ltrb(), Box.to_xywh(), Box.to_cxywh(), Box.toformat(), Box.astype(), Box.corners(), Box.to_boxes(), Box.aspect_ratio, Box.center, Box.center_x, Box.center_y, Box.width, Box.height, Box.tl_x, Box.tl_y, Box.br_x, Box.br_y, Box.dtype, Box.area, Box.to_slice(), Box.to_shapely(), Box.to_polygon(), Box.to_coco(), Box.draw_on(), Box.draw()

Boxes: Boxes.random(), Boxes.copy(), Boxes.concatenate(), Boxes.compress(), Boxes.take(), Boxes.is_tensor(), Boxes.is_numpy(), Boxes._impl, Boxes.device, Boxes.astype(), Boxes.round(), Boxes.quantize(), Boxes.numpy(), Boxes.tensor(), Boxes.ious(), Boxes.iooas(), Boxes.isect_area(), Boxes.intersection(), Boxes.union_hull(), Boxes.bounding_box(), Boxes.contains(), Boxes.view(), Boxes._ensure_nonnegative_extent()

Coords: Coords.dtype, Coords.dim, Coords.shape, Coords.copy(), Coords.random(), Coords.is_numpy(), Coords.is_tensor(), Coords.compress(), Coords.take(), Coords.astype(), Coords.round(), Coords.view(), Coords.concatenate(), Coords.device, Coords._impl, Coords.tensor(), Coords.numpy(), Coords.reorder_axes(), Coords.warp(), Coords._warp_imgaug(), Coords.to_imgaug(), Coords.from_imgaug(), Coords.scale(), Coords.translate(), Coords.rotate(), Coords._rectify_about(), Coords.fill(), Coords.soft_fill(), Coords.draw_on(), Coords.draw()

Detections: Detections.copy(), Detections.coerce(), Detections.from_coco_annots(), Detections.to_coco(), Detections.boxes, Detections.class_idxs, Detections.scores, Detections.probs, Detections.weights, Detections.classes, Detections.num_boxes(), Detections.warp(), Detections.scale(), Detections.translate(), Detections.concatenate(), Detections.argsort(), Detections.sort(), Detections.compress(), Detections.take(), Detections.device, Detections.is_tensor(), Detections.is_numpy(), Detections.numpy(), Detections.dtype, Detections.tensor(), Detections.demo(), Detections.random()

Heatmap

Mask: Mask.dtype, Mask.random(), Mask.demo(), Mask.from_text(), Mask.copy(), Mask.union(), Mask.intersection(), Mask.shape, Mask.area, Mask.get_patch(), Mask.get_xywh(), Mask.bounding_box(), Mask.get_polygon(), Mask.to_mask(), Mask.to_boxes(), Mask.to_multi_polygon(), Mask.get_convex_hull(), Mask.iou(), Mask.coerce(), Mask._to_coco(), Mask.to_coco()

MaskList

MultiPolygon: MultiPolygon.random(), MultiPolygon.fill(), MultiPolygon.to_multi_polygon(), MultiPolygon.to_boxes(), MultiPolygon.to_box(), MultiPolygon.bounding_box(), MultiPolygon.box(), MultiPolygon.to_mask(), MultiPolygon.to_relative_mask(), MultiPolygon.coerce(), MultiPolygon.to_shapely(), MultiPolygon.from_shapely(), MultiPolygon.from_geojson(), MultiPolygon.to_geojson(), MultiPolygon.from_coco(), MultiPolygon._to_coco(), MultiPolygon.to_coco(), MultiPolygon.swap_axes(), MultiPolygon.draw_on()

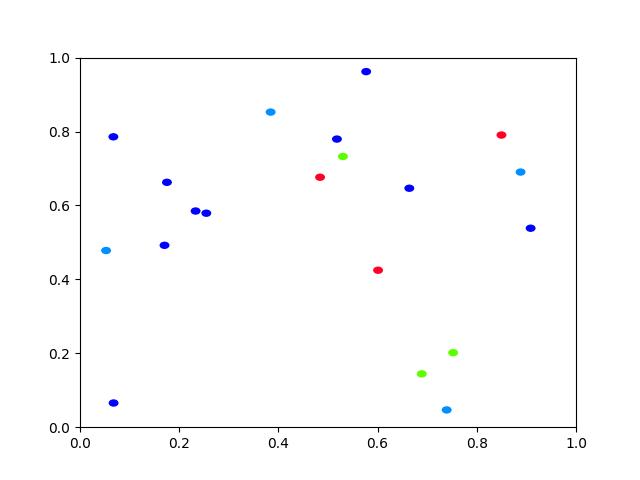

Points: Points.shape, Points.xy, Points.random(), Points.is_numpy(), Points.is_tensor(), Points._impl, Points.tensor(), Points.round(), Points.numpy(), Points.draw_on(), Points.draw(), Points.compress(), Points.take(), Points.concatenate(), Points.to_coco(), Points._to_coco(), Points.coerce(), Points._from_coco(), Points.from_coco()

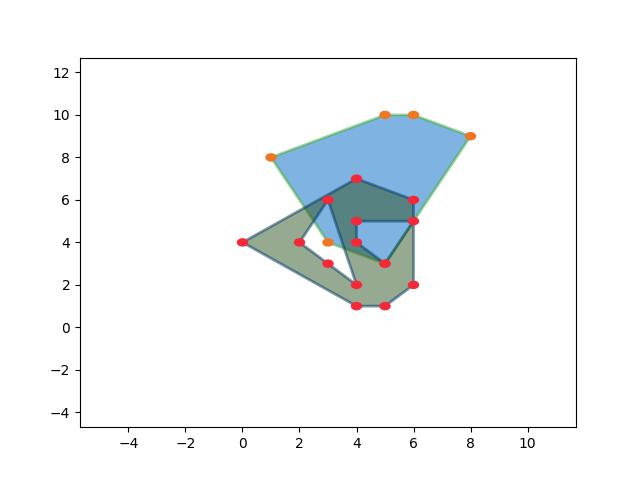

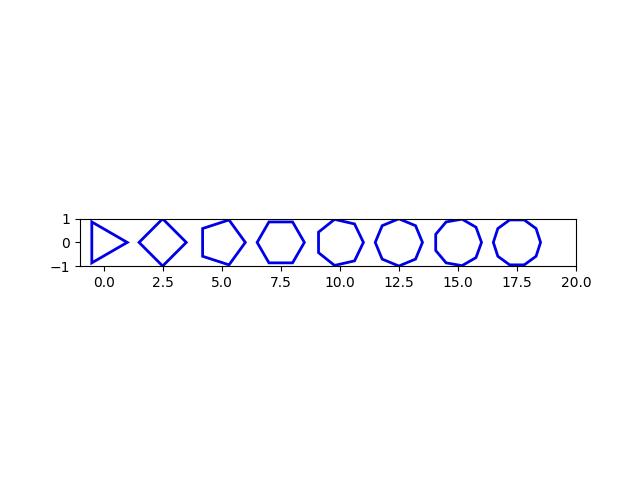

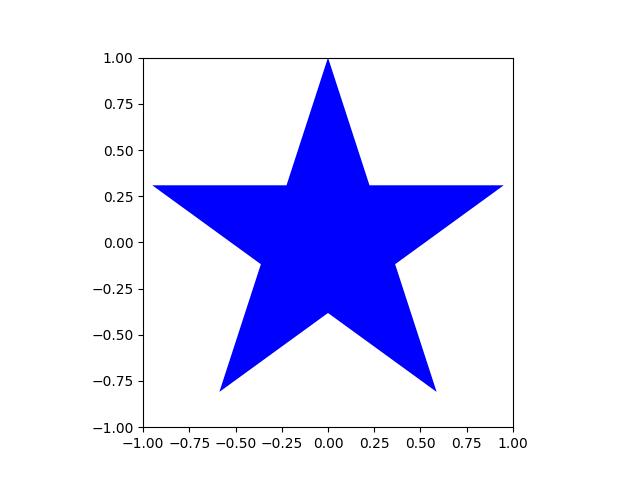

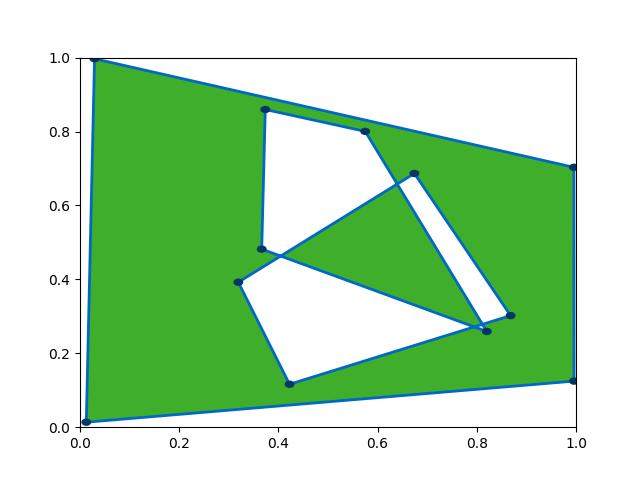

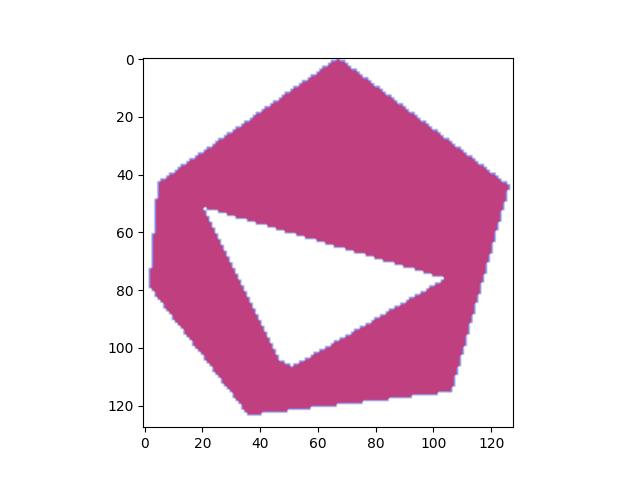

PointsList

Polygon: Polygon.exterior, Polygon.interiors, Polygon.circle(), Polygon.regular(), Polygon.star(), Polygon.random(), Polygon._impl, Polygon.to_mask(), Polygon.to_relative_mask(), Polygon._to_cv_countours(), Polygon.coerce(), Polygon.from_shapely(), Polygon.from_wkt(), Polygon.from_geojson(), Polygon.to_shapely(), Polygon.to_geojson(), Polygon.to_wkt(), Polygon.from_coco(), Polygon._to_coco(), Polygon.to_coco(), Polygon.to_multi_polygon(), Polygon.to_boxes(), Polygon.centroid, Polygon.to_box(), Polygon.bounding_box(), Polygon.box(), Polygon.bounding_box_polygon(), Polygon.copy(), Polygon.clip(), Polygon.fill(), Polygon.draw_on(), Polygon.draw(), Polygon._ensure_vertex_order(), Polygon.interpolate(), Polygon.morph()

PolygonList, Segmentation, SegmentationList, smooth_prob()

- Submodules

Submodules

- kwimage._im_color_data module

- kwimage._internal module

- kwimage.im_alphablend module

- kwimage.im_color module

Color: Color.coerce(), Color.forimage(), Color._forimage(), Color.ashex(), Color.as255(), Color.as01(), Color._is_base01(), Color._is_base255(), Color._hex_to_01(), Color._ensure_color01(), Color._255_to_01(), Color._string_to_01(), Color.named_colors(), Color.distinct(), Color.random(), Color.distance(), Color.interpolate(), Color.to_image(), Color.adjust()

- kwimage.im_core module

- kwimage.im_cv2 module

- kwimage.im_demodata module

- kwimage.im_draw module

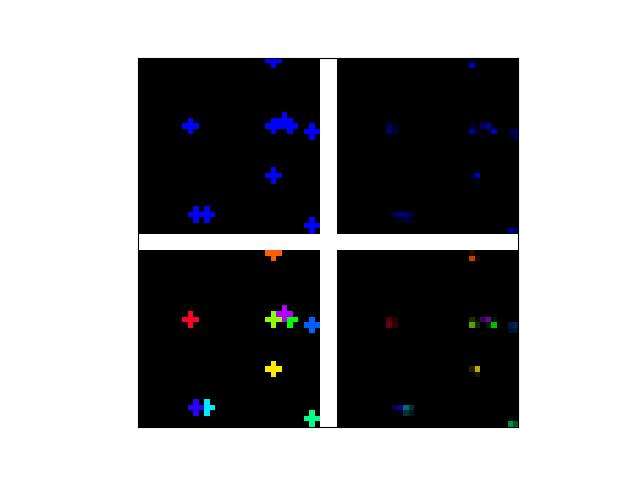

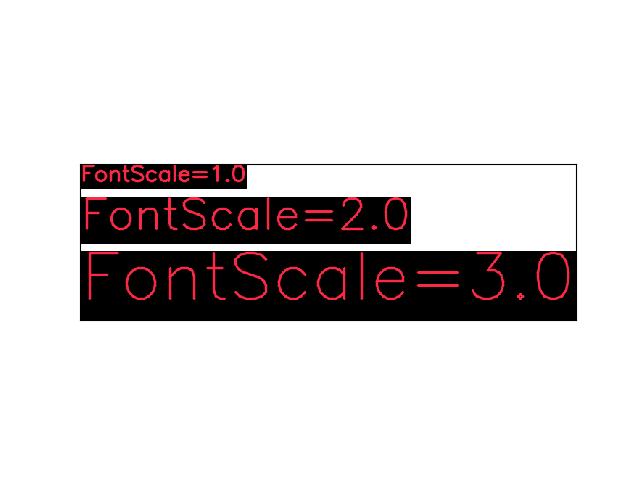

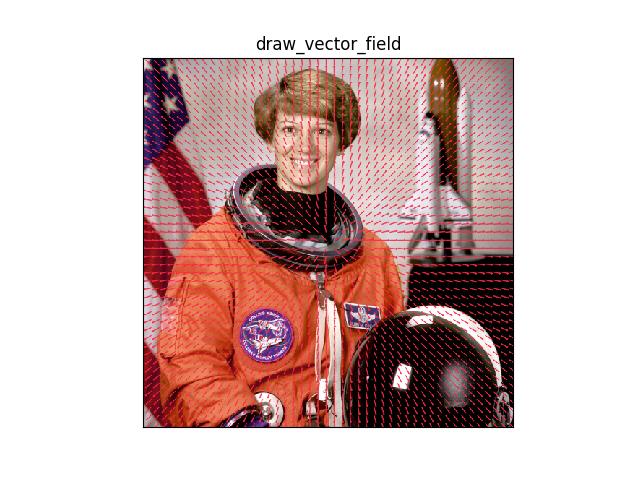

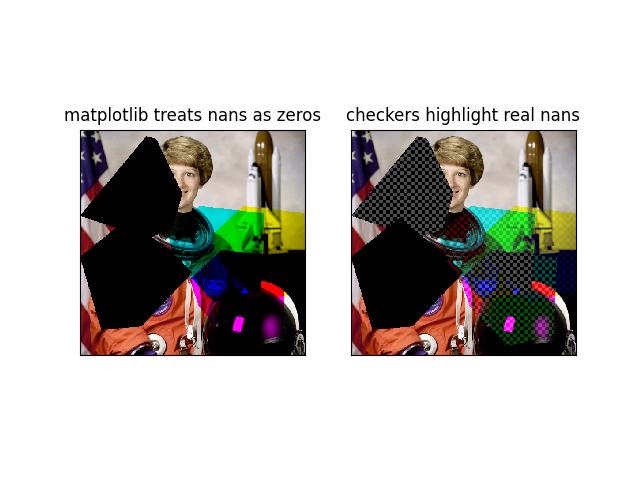

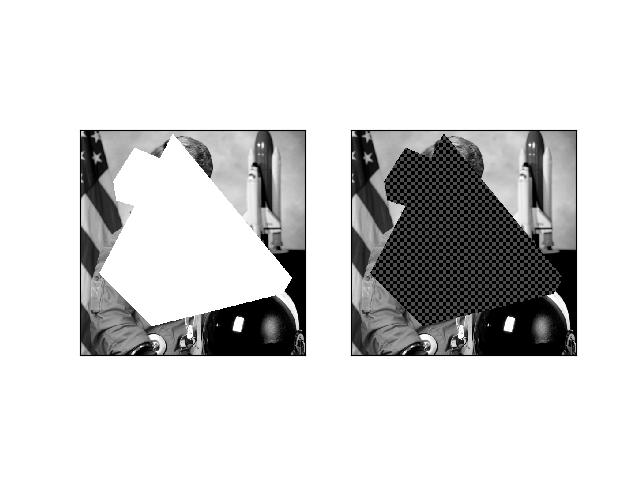

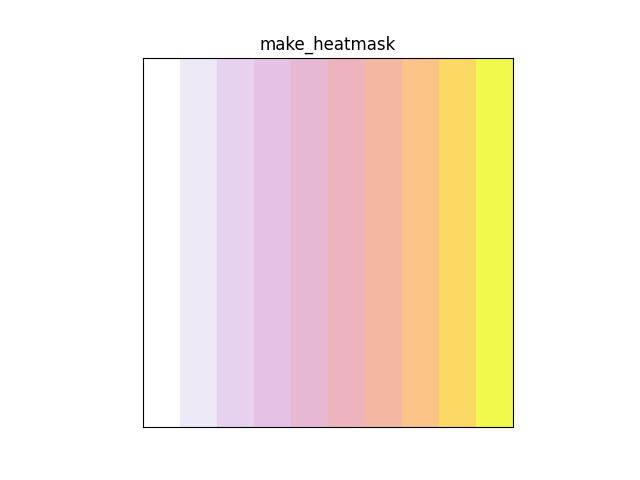

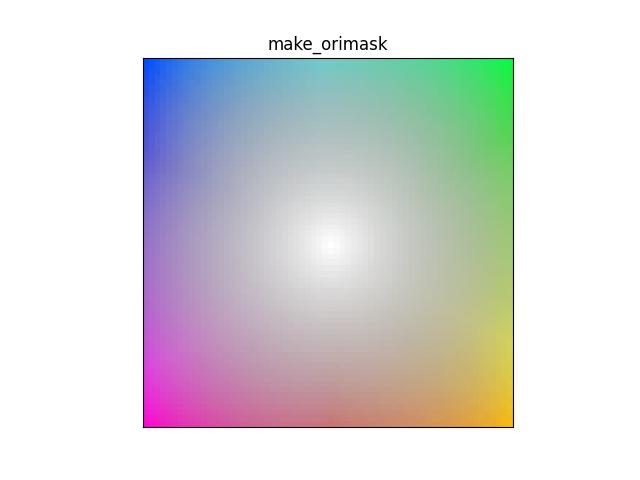

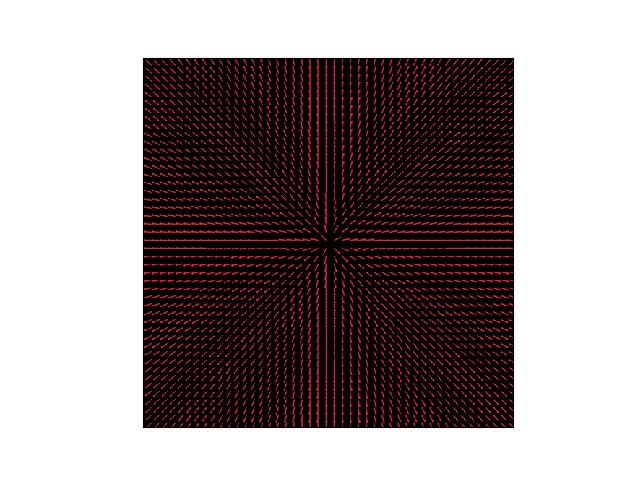

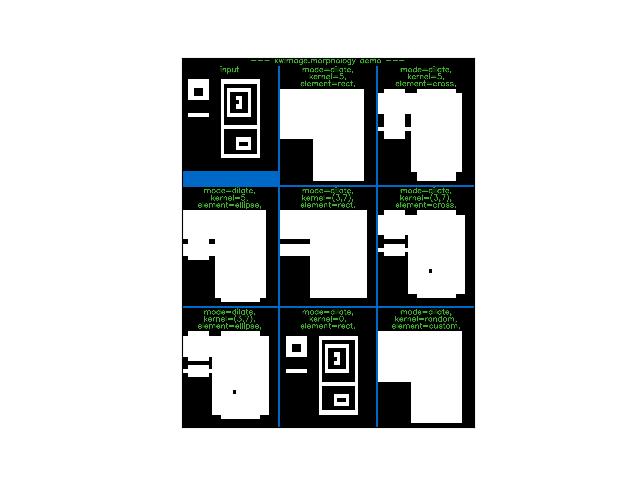

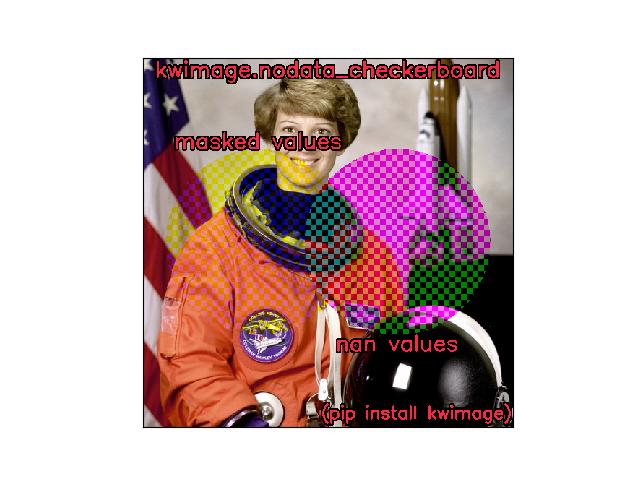

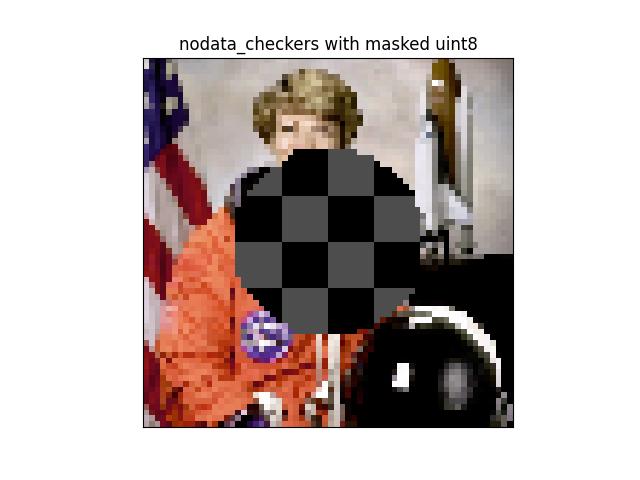

_draw_text_on_image_pil(), draw_text_on_image(), _text_sizes(), draw_clf_on_image(), draw_boxes_on_image(), draw_line_segments_on_image(), _broadcast_colors(), make_heatmask(), make_orimask(), make_vector_field(), draw_vector_field(), draw_header_text(), fill_nans_with_checkers(), _masked_checkerboard(), nodata_checkerboard()

- kwimage.im_filter module

- kwimage.im_io module

- kwimage.im_runlen module

- kwimage.im_stack module

- kwimage.transform module

Transform, Matrix, Linear

Affine: Affine.shape, Affine.concise(), Affine.from_shapely(), Affine.from_affine(), Affine.from_gdal(), Affine.from_skimage(), Affine.coerce(), Affine.eccentricity(), Affine.to_affine(), Affine.to_gdal(), Affine.to_shapely(), Affine.to_skimage(), Affine.scale(), Affine.translate(), Affine._scale_translate(), Affine.rotate(), Affine.random(), Affine.random_params(), Affine.decompose(), Affine.affine(), Affine.fit(), Affine.fliprot()

Projective

- kwimage.util_warp module

_coordinate_grid(), warp_tensor(), subpixel_align(), subpixel_set(), subpixel_accum(), subpixel_maximum(), subpixel_minimum(), subpixel_slice(), subpixel_translate(), _padded_slice(), _ensure_arraylike(), _rectify_slice(), _warp_tensor_cv2(), warp_points(), remove_homog(), add_homog(), subpixel_getvalue(), subpixel_setvalue(), _bilinear_coords()

Module contents

The Kitware Image Module (kwimage) contains functions to accomplish lower-level image operations via a high-level API.

Read the docs | Gitlab (main) | Github (mirror) | Pypi

Module features:

- Image reader / writer functions with multiple backends

- Wrappers around opencv that simplify and extend its functionality

- Annotation data structures with configurable backends

- Many functions are aware of torch tensors and can be used interchangeably with ndarrays

- Miscellaneous image manipulation functions

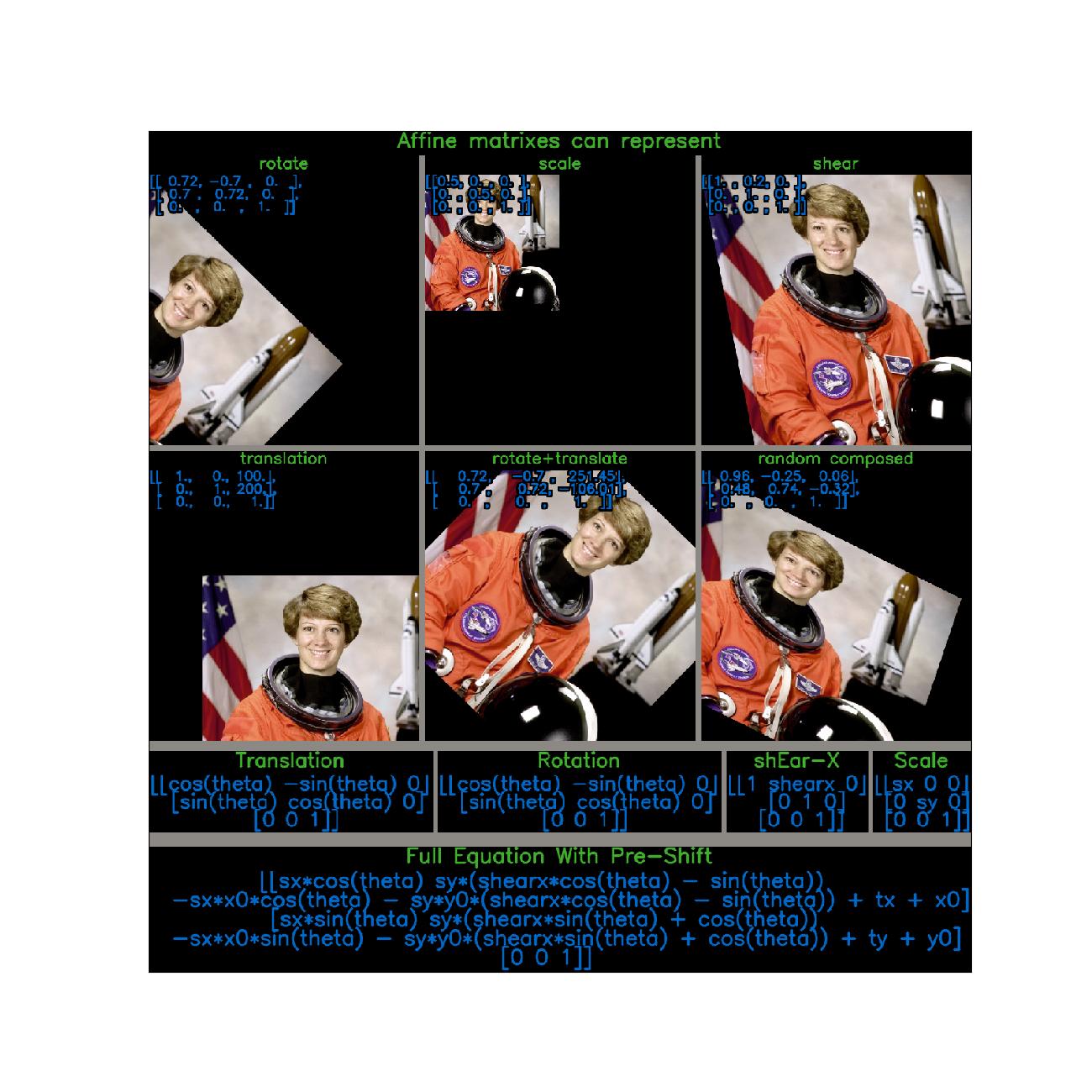

- class kwimage.Affine(matrix)

Bases: Projective

A thin wrapper around a 3x3 matrix that represents an affine transform.

Implements methods for:

- creating random affine transforms

- decomposing the matrix

- finding a best-fit transform between corresponding points

TODO: - [ ] fully rational transform

Example

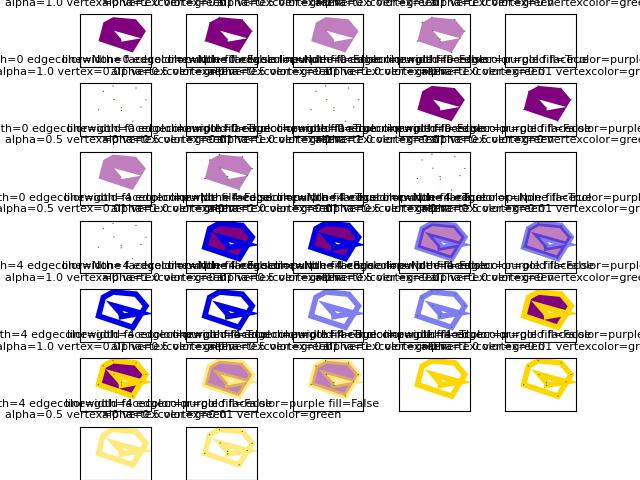

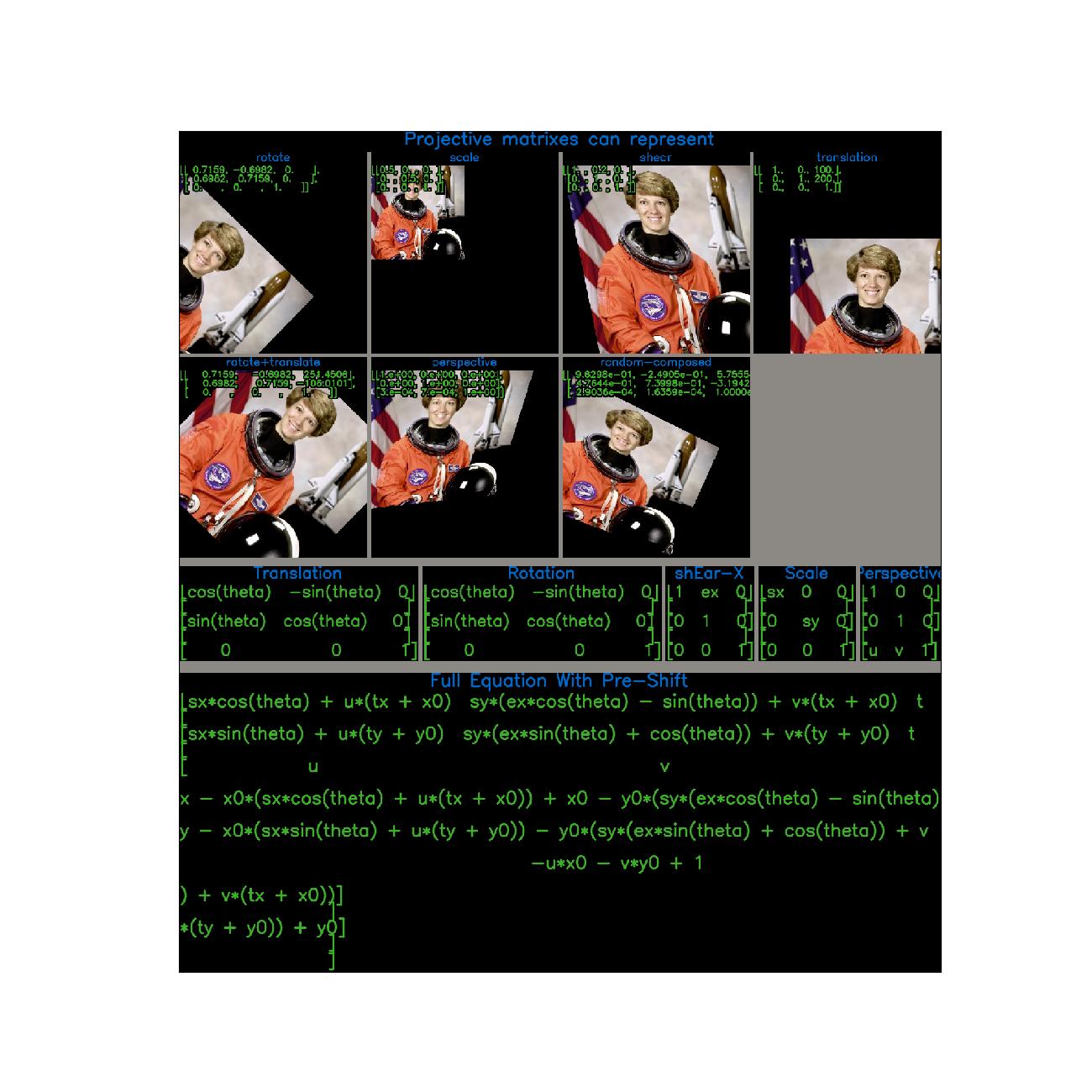

>>> import kwimage
>>> import math
>>> import numpy as np
>>> import ubelt as ub
>>> image = kwimage.grab_test_image()
>>> theta = 0.123 * math.tau
>>> components = {
>>>     'rotate': kwimage.Affine.affine(theta=theta),
>>>     'scale': kwimage.Affine.affine(scale=0.5),
>>>     'shear': kwimage.Affine.affine(shearx=0.2),
>>>     'translation': kwimage.Affine.affine(offset=(100, 200)),
>>>     'rotate+translate': kwimage.Affine.affine(theta=0.123 * math.tau, about=(256, 256)),
>>>     'random composed': kwimage.Affine.random(scale=(0.5, 1.5), translate=(-20, 20), theta=(-theta, theta), shearx=(0, .4), rng=900558176210808600),
>>> }
>>> warp_stack = []
>>> for key, aff in components.items():
...     warp = kwimage.warp_affine(image, aff)
...     warp = kwimage.draw_text_on_image(
...        warp,
...        ub.urepr(aff.matrix, nl=1, nobr=1, precision=2, si=1, sv=1, with_dtype=0),
...        org=(1, 1),
...        valign='top', halign='left',
...        fontScale=0.8, color='kw_blue',
...        border={'thickness': 3},
...        )
...     warp = kwimage.draw_header_text(warp, key, color='kw_green')
...     warp_stack.append(warp)
>>> warp_canvas = kwimage.stack_images_grid(warp_stack, chunksize=3, pad=10, bg_value='kitware_gray')
>>> # xdoctest: +REQUIRES(module:sympy)
>>> import sympy
>>> # Shows the symbolic construction of the code
>>> # https://groups.google.com/forum/#!topic/sympy/k1HnZK_bNNA
>>> from sympy.abc import theta
>>> params = x0, y0, sx, sy, theta, shearx, tx, ty = sympy.symbols(
>>>     'x0, y0, sx, sy, theta, shearx, tx, ty')
>>> theta = sympy.symbols('theta')
>>> # move the center to 0, 0
>>> tr1_ = np.array([[1, 0,  -x0],
>>>                  [0, 1,  -y0],
>>>                  [0, 0,    1]])
>>> # Define core components of the affine transform
>>> S = np.array([  # scale
>>>     [sx,  0, 0],
>>>     [ 0, sy, 0],
>>>     [ 0,  0, 1]])
>>> E = np.array([  # x-shear
>>>     [1,  shearx, 0],
>>>     [0,  1, 0],
>>>     [0,  0, 1]])
>>> R = np.array([  # rotation
>>>     [sympy.cos(theta), -sympy.sin(theta), 0],
>>>     [sympy.sin(theta),  sympy.cos(theta), 0],
>>>     [               0,                 0, 1]])
>>> T = np.array([  # translation
>>>     [ 1,  0, tx],
>>>     [ 0,  1, ty],
>>>     [ 0,  0,  1]])
>>> # Construct the affine 3x3 about the origin
>>> aff0 = np.array(sympy.simplify(T @ R @ E @ S))
>>> # move 0, 0 back to the specified origin
>>> tr2_ = np.array([[1, 0,  x0],
>>>                  [0, 1,  y0],
>>>                  [0, 0,   1]])
>>> # combine transformations
>>> aff = tr2_ @ aff0 @ tr1_
>>> print('aff = {}'.format(ub.urepr(aff.tolist(), nl=1)))
>>> # This could be prettier
>>> texts = {
>>>     'Translation': sympy.pretty(T),
>>>     'Rotation': sympy.pretty(R),
>>>     'shEar-X': sympy.pretty(E),
>>>     'Scale': sympy.pretty(S),
>>> }
>>> print(ub.urepr(texts, nl=2, sv=1))
>>> equation_stack = []
>>> for text, m in texts.items():
>>>     render_canvas = kwimage.draw_text_on_image(None, m, color='kw_blue', fontScale=1.0)
>>>     render_canvas = kwimage.draw_header_text(render_canvas, text, color='kw_green')
>>>     render_canvas = kwimage.imresize(render_canvas, scale=1.3)
>>>     equation_stack.append(render_canvas)
>>> equation_canvas = kwimage.stack_images(equation_stack, pad=10, axis=1, bg_value='kitware_gray')
>>> render_canvas = kwimage.draw_text_on_image(None, sympy.pretty(aff), color='kw_blue', fontScale=1.0)
>>> render_canvas = kwimage.draw_header_text(render_canvas, 'Full Equation With Pre-Shift', color='kw_green')
>>> # xdoctest: -REQUIRES(module:sympy)
>>> # xdoctest: +REQUIRES(--show)
>>> import kwplot
>>> plt = kwplot.autoplt()
>>> canvas = kwimage.stack_images([warp_canvas, equation_canvas, render_canvas], pad=20, axis=0, bg_value='kitware_gray', resize='larger')
>>> canvas = kwimage.draw_header_text(canvas, 'Affine matrixes can represent', color='kw_green')
>>> kwplot.imshow(canvas)
>>> fig = plt.gcf()
>>> fig.set_size_inches(13, 13)

Example

>>> import kwimage
>>> import numpy as np
>>> self = kwimage.Affine(np.eye(3))
>>> m1 = np.eye(3) @ self
>>> m2 = self @ np.eye(3)

Example

>>> from kwimage.transform import *  # NOQA
>>> import numpy as np
>>> import ubelt as ub
>>> m = {}
>>> # Works, and returns an Affine
>>> m[len(m)] = x = Affine.random() @ np.eye(3)
>>> assert isinstance(x, Affine)
>>> m[len(m)] = x = Affine.random() @ None
>>> assert isinstance(x, Affine)
>>> # Works, and returns an ndarray
>>> m[len(m)] = x = np.eye(3) @ Affine.random()
>>> assert isinstance(x, np.ndarray)
>>> # Works, and returns a Matrix
>>> m[len(m)] = x = Affine.random() @ Matrix.random(3)
>>> assert isinstance(x, Matrix)
>>> m[len(m)] = x = Matrix.random(3) @ Affine.random()
>>> assert isinstance(x, Matrix)
>>> print('m = {}'.format(ub.urepr(m)))

- property shape¶

- concise()[source]¶

Return a concise coercible dictionary representation of this matrix

- Returns:

a small serializable dict of concise parameters that can be passed to Affine.coerce() to reconstruct this object.

- Return type:

Dict

Example

>>> import kwimage
>>> import numpy as np
>>> import ubelt as ub
>>> self = kwimage.Affine.random(rng=0, scale=1)
>>> params = self.concise()
>>> assert np.allclose(kwimage.Affine.coerce(params).matrix, self.matrix)
>>> print('params = {}'.format(ub.urepr(params, nl=1, precision=2)))
params = {
    'offset': (0.08, 0.38),
    'theta': 0.08,
    'type': 'affine',
}

Example

>>> import kwimage
>>> import numpy as np
>>> import ubelt as ub
>>> self = kwimage.Affine.random(rng=0, scale=2, offset=0)
>>> params = self.concise()
>>> assert np.allclose(kwimage.Affine.coerce(params).matrix, self.matrix)
>>> print('params = {}'.format(ub.urepr(params, nl=1, precision=2)))
params = {
    'scale': 2.00,
    'theta': 0.04,
    'type': 'affine',
}

- classmethod from_shapely(sh_aff)[source]¶

Shapely affine tuples are in the format (a, b, d, e, x, y)
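The tuple layout above matches the 2D form accepted by `shapely.affinity.affine_transform`, `[a, b, d, e, xoff, yoff]`, where `x' = a*x + b*y + xoff` and `y' = d*x + e*y + yoff`. The following is a minimal NumPy sketch of the equivalent 3x3 matrix. The helper name `shapely_tuple_to_matrix` is hypothetical and is not part of kwimage.

```python
import numpy as np


def shapely_tuple_to_matrix(sh_aff):
    """Expand a shapely-style (a, b, d, e, xoff, yoff) tuple into a 3x3 matrix."""
    a, b, d, e, xoff, yoff = sh_aff
    return np.array([
        [a, b, xoff],
        [d, e, yoff],
        [0, 0, 1],
    ], dtype=float)


# Scale x by 2 and y by 3, then shift by (10, 20)
matrix = shapely_tuple_to_matrix((2, 0, 0, 3, 10, 20))
point = np.array([1.0, 1.0, 1.0])  # homogeneous (x, y, 1)
warped = matrix @ point
print(warped[:2])
```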

- classmethod coerce(data=None, **kwargs)[source]¶

Attempt to coerce the data into an affine object

- Parameters:

data – some data we attempt to coerce to an Affine matrix

**kwargs – some data we attempt to coerce to an Affine matrix, mutually exclusive with data.

- Returns:

Affine

Example

>>> import kwimage
>>> import numpy as np
>>> import skimage.transform
>>> kwimage.Affine.coerce({'type': 'affine', 'matrix': [[1, 0, 0], [0, 1, 0]]})
>>> kwimage.Affine.coerce({'scale': 2})
>>> kwimage.Affine.coerce({'offset': 3})
>>> kwimage.Affine.coerce(np.eye(3))
>>> kwimage.Affine.coerce(None)
>>> kwimage.Affine.coerce({})
>>> kwimage.Affine.coerce(skimage.transform.AffineTransform(scale=30))

- eccentricity()[source]¶

Eccentricity of the ellipse formed by this affine matrix

- Returns:

large when there are big scale differences in principal directions or skews.

- Return type:

References

[WikiConic]

Example

>>> import kwimage >>> kwimage.Affine.random(rng=432).eccentricity()
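As an illustration of the idea, the sketch below computes an eccentricity from the 2x2 linear part of a matrix, assuming the usual conic-section definition `e = sqrt(1 - (b / a)**2)`, where `a >= b` are the semi-axes of the ellipse that the unit circle maps to (the singular values of the linear part). The function name `ellipse_eccentricity` is hypothetical, and kwimage's own computation may differ in detail.

```python
import numpy as np


def ellipse_eccentricity(matrix):
    """Eccentricity of the image of the unit circle under the 2x2 linear part."""
    linear = np.asarray(matrix, dtype=float)[:2, :2]
    # Singular values are returned in descending order: (major, minor)
    major, minor = np.linalg.svd(linear, compute_uv=False)
    return float(np.sqrt(1.0 - (minor / major) ** 2))


isotropic = ellipse_eccentricity(np.diag([2.0, 2.0, 1.0]))   # uniform scale
stretched = ellipse_eccentricity(np.diag([10.0, 1.0, 1.0]))  # strong x stretch
print(isotropic, stretched)
```

Under this definition a uniform scale gives 0 and the value approaches 1 as the scale difference grows. The sketch does not guard against a degenerate matrix whose largest singular value is zero.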

- to_gdal()[source]¶

Convert to a gdal tuple (c, a, b, f, d, e)

- Returns:

Tuple[float, float, float, float, float, float]
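The ordering `(c, a, b, f, d, e)` corresponds to a GDAL geotransform, in which `x = c + a*col + b*row` and `y = f + d*col + e*row`. The sketch below converts between that tuple and a 3x3 matrix laid out as `[[a, b, c], [d, e, f], [0, 0, 1]]`. That layout and the helper names `matrix_to_gdal` and `gdal_to_matrix` are assumptions for illustration, not kwimage functions.

```python
import numpy as np


def matrix_to_gdal(matrix):
    """Reorder the top two rows of a 3x3 affine into (c, a, b, f, d, e)."""
    (a, b, c), (d, e, f) = np.asarray(matrix, dtype=float)[:2].tolist()
    return (c, a, b, f, d, e)


def gdal_to_matrix(gt):
    """Inverse of matrix_to_gdal."""
    c, a, b, f, d, e = gt
    return np.array([[a, b, c], [d, e, f], [0, 0, 1]], dtype=float)


# A north-up raster with 30 unit pixels and its origin at (500000, 4100000)
matrix = np.array([
    [30.0, 0.0, 500000.0],
    [0.0, -30.0, 4100000.0],
    [0.0, 0.0, 1.0],
])
gt = matrix_to_gdal(matrix)
print(gt)

# Pixel (col=2, row=3) mapped to world coordinates
world = matrix @ np.array([2.0, 3.0, 1.0])
print(world[:2])
```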

- to_shapely()[source]¶

Returns a matrix suitable for shapely.affinity.affine_transform

- Returns:

Tuple[float, float, float, float, float, float]

Example

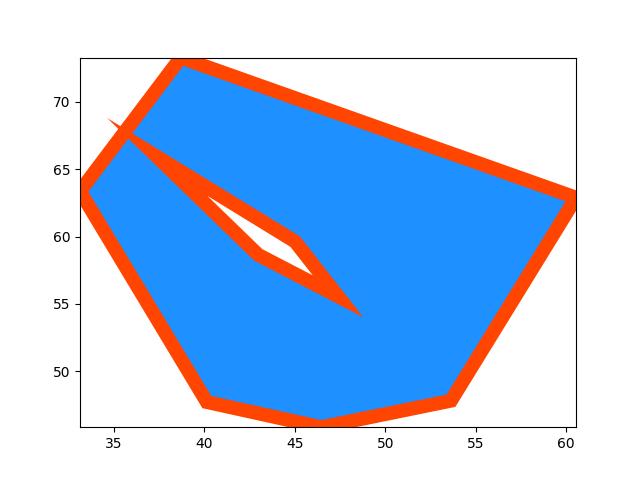

>>> import kwimage >>> self = kwimage.Affine.random() >>> sh_transform = self.to_shapely() >>> # Transform points with kwimage and shapley >>> import shapely >>> from shapely.affinity import affine_transform >>> kw_poly = kwimage.Polygon.random() >>> kw_warp_poly = kw_poly.warp(self) >>> sh_poly = kw_poly.to_shapely() >>> sh_warp_poly = affine_transform(sh_poly, sh_transform) >>> kw_warp_poly_recon = kwimage.Polygon.from_shapely(sh_warp_poly) >>> assert np.allclose(kw_warp_poly_recon.exterior.data, kw_warp_poly_recon.exterior.data)

- to_skimage()[source]¶

- Returns:

skimage.transform.AffineTransform

Example

>>> import kwimage
>>> import numpy as np
>>> self = kwimage.Affine.random()
>>> tf = self.to_skimage()
>>> # Transform points with kwimage and scikit-image
>>> kw_poly = kwimage.Polygon.random()
>>> kw_warp_xy = kw_poly.warp(self.matrix).exterior.data
>>> sk_warp_xy = tf(kw_poly.exterior.data)
>>> assert np.allclose(kw_warp_xy, sk_warp_xy)

- classmethod scale(scale)[source]¶

Create a scale Affine object

- Parameters:

scale (float | Tuple[float, float]) – x, y scale factor

- Returns:

Affine

- classmethod translate(offset)[source]¶

Create a translation Affine object

- Parameters:

offset (float | Tuple[float, float]) – x, y translation factor

- Returns:

Affine

Benchmark

>>> # xdoctest: +REQUIRES(--benchmark)
>>> # It is ~3x faster to use the more specific method
>>> import timerit
>>> import kwimage
>>> import numpy as np
>>> #
>>> offset = np.random.rand(2)
>>> ti = timerit.Timerit(100, bestof=10, verbose=2)
>>> for timer in ti.reset('time'):
>>>     with timer:
>>>         kwimage.Affine.translate(offset)
>>> #
>>> for timer in ti.reset('time'):
>>>     with timer:
>>>         kwimage.Affine.affine(offset=offset)

- classmethod rotate(theta)[source]¶

Create a rotation Affine object

- Parameters:

theta (float) – counter-clockwise rotation angle in radians

- Returns:

Affine
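For reference, the three constructors above correspond to the following 3x3 homogeneous matrices. This is a plain NumPy sketch rather than kwimage code: the helper names are hypothetical, and the rotation matrix follows the `[[cos, -sin], [sin, cos]]` form used in the symbolic example earlier on this page.

```python
import math

import numpy as np


def scale_matrix(sx, sy):
    return np.array([[sx, 0, 0], [0, sy, 0], [0, 0, 1]], dtype=float)


def translate_matrix(tx, ty):
    return np.array([[1, 0, tx], [0, 1, ty], [0, 0, 1]], dtype=float)


def rotate_matrix(theta):
    c, s = math.cos(theta), math.sin(theta)
    return np.array([[c, -s, 0], [s, c, 0], [0, 0, 1]], dtype=float)


point = np.array([1.0, 0.0, 1.0])  # homogeneous (x, y, 1)
quarter_turn = rotate_matrix(math.tau / 4)

# The rightmost matrix is applied first, so the two products differ
rotate_then_shift = translate_matrix(5, 0) @ quarter_turn
shift_then_rotate = quarter_turn @ translate_matrix(5, 0)

print((rotate_then_shift @ point)[:2])
print((shift_then_rotate @ point)[:2])
```

Because matrix products do not commute, the order in which components are combined with `@` determines the result.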

- classmethod random(shape=None, rng=None, **kw)[source]¶

Create a random Affine object

- Parameters:

rng – random number generator

**kw – passed to Affine.random_params(). Can contain coercible random distributions for scale, offset, about, theta, and shearx.

- Returns:

Affine

- classmethod random_params(rng=None, **kw)[source]¶

- Parameters:

rng – random number generator

**kw – can contain coercible random distributions for scale, offset, about, theta, and shearx.

- Returns:

affine parameters suitable to be passed to Affine.affine

- Return type:

Dict

Todo

[ ] improve kwargs parameterization

- decompose()[source]¶

Decompose the affine matrix into its individual scale, translation, rotation, and skew parameters.

- Returns:

decomposed offset, scale, theta, and shearx params

- Return type:

Dict

References

[SE3521141]

[SE70357473] https://stackoverflow.com/questions/70357473/how-to-decompose-a-2x2-affine-matrix-with-sympy

[WikiTranMat]

[WikiShear]

Example

>>> from kwimage.transform import *  # NOQA
>>> import numpy as np
>>> self = Affine.random()
>>> params = self.decompose()
>>> recon = Affine.coerce(**params)
>>> params2 = recon.decompose()
>>> pt = np.vstack([np.random.rand(2, 1), [1]])
>>> result1 = self.matrix[0:2] @ pt
>>> result2 = recon.matrix[0:2] @ pt
>>> assert np.allclose(result1, result2)

>>> self = Affine.scale(0.001) @ Affine.random()
>>> params = self.decompose()
>>> self.det()

Example

>>> # xdoctest: +REQUIRES(module:sympy)
>>> # Test decompose with symbolic matrices
>>> from kwimage.transform import *  # NOQA
>>> self = Affine.random().rationalize()
>>> self.decompose()

Example

>>> # xdoctest: +REQUIRES(module:pandas) >>> from kwimage.transform import * # NOQA >>> import kwimage >>> import pandas as pd >>> # Test consistency of decompose + reconstruct >>> param_grid = list(ub.named_product({ >>> 'theta': np.linspace(-4 * np.pi, 4 * np.pi, 3), >>> 'shearx': np.linspace(- 10 * np.pi, 10 * np.pi, 4), >>> })) >>> def normalize_angle(radian): >>> return np.arctan2(np.sin(radian), np.cos(radian)) >>> for pextra in param_grid: >>> params0 = dict(scale=(3.05, 3.07), offset=(10.5, 12.1), **pextra) >>> self = recon0 = kwimage.Affine.affine(**params0) >>> self.decompose() >>> # Test drift with multiple decompose / reconstructions >>> params_list = [params0] >>> recon_list = [recon0] >>> n = 4 >>> for _ in range(n): >>> prev = recon_list[-1] >>> params = prev.decompose() >>> recon = kwimage.Affine.coerce(**params) >>> params_list.append(params) >>> recon_list.append(recon) >>> params_df = pd.DataFrame(params_list) >>> #print('params_list = {}'.format(ub.urepr(params_list, nl=1, precision=5))) >>> print(params_df) >>> assert ub.allsame(normalize_angle(params_df['theta']), eq=np.isclose) >>> assert ub.allsame(params_df['shearx'], eq=np.allclose) >>> assert ub.allsame(params_df['scale'], eq=np.allclose) >>> assert ub.allsame(params_df['offset'], eq=np.allclose)
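To make the decomposition concrete, here is a small sketch that recovers offset, scale, theta, and shearx from a matrix built as `T @ R @ E @ S`, the order used in the symbolic example on this page. It assumes a positive x scale, a nonzero y scale, and no separate `about` shift. The functions `compose` and `decompose` are written for illustration and are not kwimage's implementation.

```python
import math

import numpy as np


def compose(scale, shearx, theta, offset):
    """Build T @ R @ E @ S from high-level parameters."""
    sx, sy = scale
    tx, ty = offset
    c, s = math.cos(theta), math.sin(theta)
    S = np.array([[sx, 0, 0], [0, sy, 0], [0, 0, 1]], dtype=float)
    E = np.array([[1, shearx, 0], [0, 1, 0], [0, 0, 1]], dtype=float)
    R = np.array([[c, -s, 0], [s, c, 0], [0, 0, 1]], dtype=float)
    T = np.array([[1, 0, tx], [0, 1, ty], [0, 0, 1]], dtype=float)
    return T @ R @ E @ S


def decompose(matrix):
    """Invert compose(), assuming sx > 0 and sy != 0."""
    a11, a12, tx = matrix[0]
    a21, a22, ty = matrix[1]
    # The first column of the linear part is sx * (cos(theta), sin(theta))
    sx = math.hypot(a11, a21)
    theta = math.atan2(a21, a11)
    c, s = math.cos(theta), math.sin(theta)
    # Undo the rotation: R.T @ A = [[sx, shearx * sy], [0, sy]]
    sy = -s * a12 + c * a22
    shearx = (c * a12 + s * a22) / sy
    return {
        'offset': (float(tx), float(ty)),
        'scale': (float(sx), float(sy)),
        'theta': float(theta),
        'shearx': float(shearx),
    }


original = compose(scale=(3.05, 3.07), shearx=0.2, theta=0.5, offset=(10.5, 12.1))
params = decompose(original)
rebuilt = compose(**params)
print(params)
```

Angles are recovered only up to a multiple of a full turn, which is why the consistency test above normalizes theta before comparing.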

- classmethod affine(scale=None, offset=None, theta=None, shear=None, about=None, shearx=None, array_cls=None, math_mod=None, **kwargs)[source]¶

Create an affine matrix from high-level parameters

- Parameters:

scale (float | Tuple[float, float]) – x, y scale factor

offset (float | Tuple[float, float]) – x, y translation factor

theta (float) – counter-clockwise rotation angle in radians

shearx (float) – shear factor parallel to the x-axis.

about (float | Tuple[float, float]) – x, y location of the origin

shear (float) – BROKEN, do not use. Counter-clockwise shear angle in radians

Todo

- [ ] Add aliases?

- origin: alias for about

- rotation: alias for theta

- translation: alias for offset

- Returns:

the constructed Affine object

- Return type:

Example

>>> from kwimage.transform import * # NOQA >>> rng = kwarray.ensure_rng(None) >>> scale = rng.randn(2) * 10 >>> offset = rng.randn(2) * 10 >>> about = rng.randn(2) * 10 >>> theta = rng.randn() * 10 >>> shearx = rng.randn() * 10 >>> # Create combined matrix from all params >>> F = Affine.affine( >>> scale=scale, offset=offset, theta=theta, shearx=shearx, >>> about=about) >>> # Test that combining components matches >>> S = Affine.affine(scale=scale) >>> T = Affine.affine(offset=offset) >>> R = Affine.affine(theta=theta) >>> E = Affine.affine(shearx=shearx) >>> O = Affine.affine(offset=about) >>> # combine (note shear must be on the RHS of rotation) >>> alt = O @ T @ R @ E @ S @ O.inv() >>> print('F = {}'.format(ub.urepr(F.matrix.tolist(), nl=1))) >>> print('alt = {}'.format(ub.urepr(alt.matrix.tolist(), nl=1))) >>> assert np.all(np.isclose(alt.matrix, F.matrix)) >>> pt = np.vstack([np.random.rand(2, 1), [[1]]]) >>> warp_pt1 = (F.matrix @ pt) >>> warp_pt2 = (alt.matrix @ pt) >>> assert np.allclose(warp_pt2, warp_pt1)

Sympy

>>> # xdoctest: +SKIP >>> import sympy >>> # Shows the symbolic construction of the code >>> # https://groups.google.com/forum/#!topic/sympy/k1HnZK_bNNA >>> from sympy.abc import theta >>> params = x0, y0, sx, sy, theta, shearx, tx, ty = sympy.symbols( >>> 'x0, y0, sx, sy, theta, shearx, tx, ty') >>> # move the center to 0, 0 >>> tr1_ = np.array([[1, 0, -x0], >>> [0, 1, -y0], >>> [0, 0, 1]]) >>> # Define core components of the affine transform >>> S = np.array([ # scale >>> [sx, 0, 0], >>> [ 0, sy, 0], >>> [ 0, 0, 1]]) >>> E = np.array([ # x-shear >>> [1, shearx, 0], >>> [0, 1, 0], >>> [0, 0, 1]]) >>> R = np.array([ # rotation >>> [sympy.cos(theta), -sympy.sin(theta), 0], >>> [sympy.sin(theta), sympy.cos(theta), 0], >>> [ 0, 0, 1]]) >>> T = np.array([ # translation >>> [ 1, 0, tx], >>> [ 0, 1, ty], >>> [ 0, 0, 1]]) >>> # Contruct the affine 3x3 about the origin >>> aff0 = np.array(sympy.simplify(T @ R @ E @ S)) >>> # move 0, 0 back to the specified origin >>> tr2_ = np.array([[1, 0, x0], >>> [0, 1, y0], >>> [0, 0, 1]]) >>> # combine transformations >>> aff = tr2_ @ aff0 @ tr1_ >>> print('aff = {}'.format(ub.urepr(aff.tolist(), nl=1)))

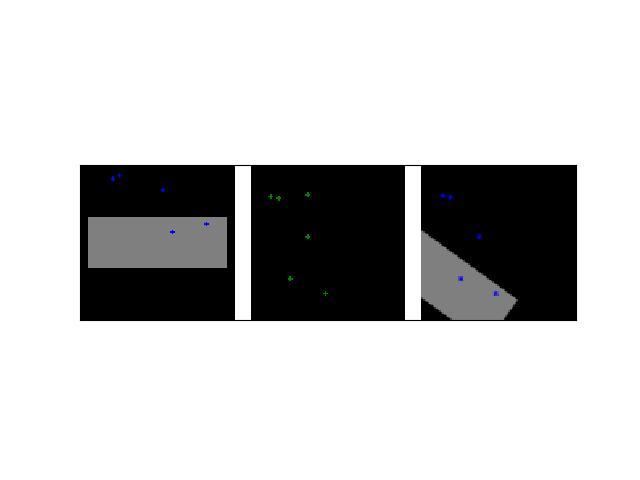

- classmethod fit(pts1, pts2)[source]¶

Fit an affine transformation between a set of corresponding points

- Parameters:

pts1 (ndarray) – An Nx2 array of points in “space 1”.

pts2 (ndarray) – A corresponding Nx2 array of points in “space 2”

- Returns:

a transform that warps from “space1” to “space2”.

- Return type:

Note

An affine matrix has 6 degrees of freedom, so at least 3 non-colinear xy-point pairs are needed.

References

[Lowe04] https://www.cs.ubc.ca/~lowe/papers/ijcv04.pdf page 22
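One common way to solve this problem is ordinary least squares on homogeneous coordinates, sketched below with NumPy. This illustrates the general technique under the stated point-count requirement. It is not necessarily the algorithm kwimage uses, and the function name `fit_affine` is hypothetical.

```python
import numpy as np


def fit_affine(pts1, pts2):
    """Least-squares 3x3 affine that maps Nx2 pts1 onto Nx2 pts2."""
    pts1 = np.asarray(pts1, dtype=float)
    pts2 = np.asarray(pts2, dtype=float)
    # Append a column of ones so the translation is part of the solve
    homog = np.hstack([pts1, np.ones((len(pts1), 1))])  # N x 3
    # Solve homog @ M.T ~= pts2 for the 2x3 block M
    solution, *_ = np.linalg.lstsq(homog, pts2, rcond=None)  # 3 x 2
    matrix = np.eye(3)
    matrix[:2] = solution.T
    return matrix


true_matrix = np.array([
    [0.9, -0.2, 5.0],
    [0.3, 1.1, -2.0],
    [0.0, 0.0, 1.0],
])
pts1 = np.array([[0, 0], [10, 0], [0, 10], [7, 3]], dtype=float)
pts2 = (np.hstack([pts1, np.ones((4, 1))]) @ true_matrix.T)[:, :2]
recovered = fit_affine(pts1, pts2)
print(np.round(recovered, 3))
```

With exact correspondences the transform is recovered to numerical precision. With noisy points the result minimizes the squared error in "space 2".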

Example

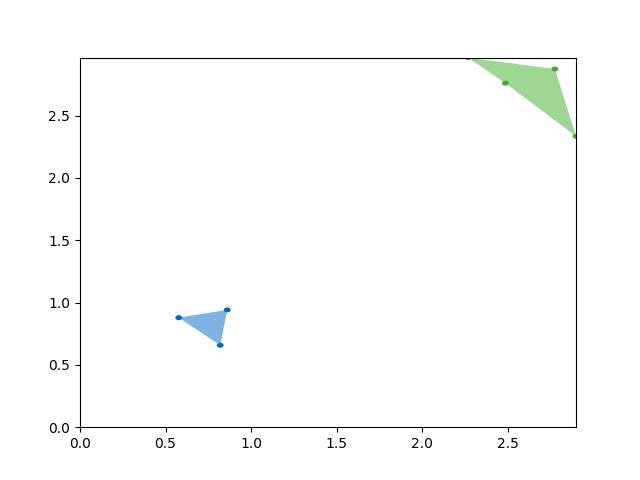

>>> # Create a set of points, warp them, then recover the warp >>> import kwimage >>> points = kwimage.Points.random(6).scale(64) >>> #A1 = kwimage.Affine.affine(scale=0.9, theta=-3.2, offset=(2, 3), about=(32, 32), skew=2.3) >>> #A2 = kwimage.Affine.affine(scale=0.8, theta=0.8, offset=(2, 0), about=(32, 32)) >>> A1 = kwimage.Affine.random() >>> A2 = kwimage.Affine.random() >>> A12_real = A2 @ A1.inv() >>> points1 = points.warp(A1) >>> points2 = points.warp(A2) >>> # Recover the warp >>> pts1, pts2 = points1.xy, points2.xy >>> A_recovered = kwimage.Affine.fit(pts1, pts2) >>> assert np.all(np.isclose(A_recovered.matrix, A12_real.matrix)) >>> # xdoctest: +REQUIRES(--show) >>> import kwplot >>> kwplot.autompl() >>> base1 = np.zeros((96, 96, 3)) >>> base1[32:-32, 5:-5] = 0.5 >>> base2 = np.zeros((96, 96, 3)) >>> img1 = points1.draw_on(base1, radius=3, color='blue') >>> img2 = points2.draw_on(base2, radius=3, color='green') >>> img1_warp = kwimage.warp_affine(img1, A_recovered) >>> canvas = kwimage.stack_images([img1, img2, img1_warp], pad=10, axis=1, bg_value=(1., 1., 1.)) >>> kwplot.imshow(canvas)

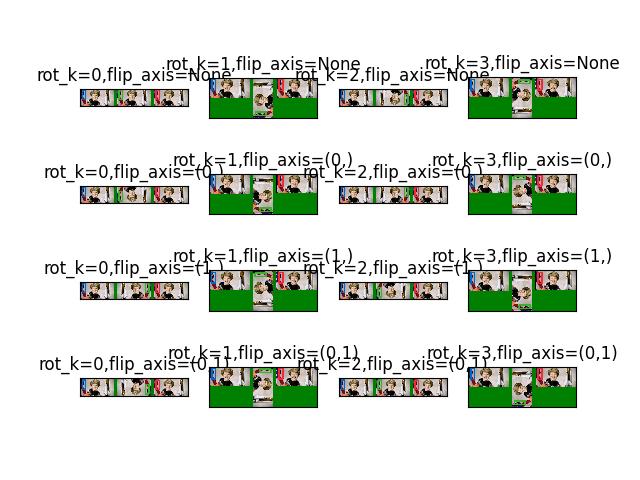

- classmethod fliprot(flip_axis=None, rot_k=0, axes=(0, 1), canvas_dsize=None)[source]¶

Creates a flip/rotation transform with respect to an image of a given size in the positive quadrant (i.e. warped data within the specified canvas size will stay in the positive quadrant).

- Parameters:

flip_axis (int) – the axis dimension to flip, i.e. 0 flips the y-axis and 1 flips the x-axis.

rot_k (int) – number of counterclockwise 90 degree rotations that occur after the flips.

axes (Tuple[int, int]) – The axis ordering. Unhandled in this version. Do not change this.

canvas_dsize (Tuple[int, int]) – The width / height of the canvas the fliprot is applied in.

- Returns:

The affine matrix representing the canvas-aligned flip and rotation.

- Return type:

Note

Requiring that the image size is known makes this a place that errors could occur depending on your interpretation of pixels as points or areas. There is probably a better way to describe the issue, but the second doctest shows the issue when trying to use warp-affine’s auto-dsize feature. See [MR81] for details.

References

CommandLine

xdoctest -m kwimage.transform Affine.fliprot:0 --show
xdoctest -m kwimage.transform Affine.fliprot:1 --show

Example

>>> import kwimage >>> H, W = 64, 128 >>> canvas_dsize = (W, H) >>> box1 = kwimage.Boxes.random(1).scale((W, H)).quantize() >>> ltrb = box1.data >>> rot_k = 4 >>> annot = box1 >>> annot = box1.to_polygons()[0] >>> annot1 = annot.copy() >>> # The first 8 are the cannonically unique group elements >>> fliprot_params = [ >>> {'rot_k': 0, 'flip_axis': None}, >>> {'rot_k': 1, 'flip_axis': None}, >>> {'rot_k': 2, 'flip_axis': None}, >>> {'rot_k': 3, 'flip_axis': None}, >>> {'rot_k': 0, 'flip_axis': (0,)}, >>> {'rot_k': 1, 'flip_axis': (0,)}, >>> {'rot_k': 2, 'flip_axis': (0,)}, >>> {'rot_k': 3, 'flip_axis': (0,)}, >>> # The rest of these dont result in any different data, but we need to test them >>> {'rot_k': 0, 'flip_axis': (1,)}, >>> {'rot_k': 1, 'flip_axis': (1,)}, >>> {'rot_k': 2, 'flip_axis': (1,)}, >>> {'rot_k': 3, 'flip_axis': (1,)}, >>> {'rot_k': 0, 'flip_axis': (0, 1)}, >>> {'rot_k': 1, 'flip_axis': (0, 1)}, >>> {'rot_k': 2, 'flip_axis': (0, 1)}, >>> {'rot_k': 3, 'flip_axis': (0, 1)}, >>> ] >>> results = [] >>> for params in fliprot_params: >>> tf = kwimage.Affine.fliprot(canvas_dsize=canvas_dsize, **params) >>> annot2 = annot.warp(tf) >>> annot3 = annot2.warp(tf.inv()) >>> #annot3 = inv_fliprot_annot(annot2, canvas_dsize=canvas_dsize, **params) >>> results.append({ >>> 'annot2': annot2, >>> 'annot3': annot3, >>> 'params': params, >>> 'tf': tf, >>> 'canvas_dsize': canvas_dsize, >>> }) >>> box = kwimage.Box.coerce([0, 0, W, H], format='xywh') >>> for result in results: >>> params = result['params'] >>> warped = box.warp(result['tf']) >>> print('---') >>> print('params = {}'.format(ub.urepr(params, nl=1))) >>> print('box = {}'.format(ub.urepr(box, nl=1))) >>> print('warped = {}'.format(ub.urepr(warped, nl=1))) >>> print(ub.hzcat(['tf = ', ub.urepr(result['tf'], nl=1)]))

>>> # xdoctest: +REQUIRES(--show) >>> import kwplot >>> kwplot.autompl() >>> S = max(W, H) >>> image1 = kwimage.grab_test_image('astro', dsize=(S, S))[:H, :W] >>> pnum_ = kwplot.PlotNums(nCols=4, nSubplots=len(results)) >>> for result in results: >>> #image2 = kwimage.warp_affine(image1.copy(), result['tf'], dsize=(S, S)) # fixme dsize=positive should work here >>> image2 = kwimage.warp_affine(image1.copy(), result['tf'], dsize='positive') # fixme dsize=positive should work here >>> #image3 = kwimage.warp_affine(image2.copy(), result['tf'].inv(), dsize=(S, S)) >>> image3 = kwimage.warp_affine(image2.copy(), result['tf'].inv(), dsize='positive') >>> annot2 = result['annot2'] >>> annot3 = result['annot3'] >>> canvas1 = annot1.draw_on(image1.copy(), edgecolor='kitware_blue', fill=False) >>> canvas2 = annot2.draw_on(image2.copy(), edgecolor='kitware_green', fill=False) >>> canvas3 = annot3.draw_on(image3.copy(), edgecolor='kitware_red', fill=False) >>> canvas = kwimage.stack_images([canvas1, canvas2, canvas3], axis=1, pad=10, bg_value='green') >>> kwplot.imshow(canvas, pnum=pnum_(), title=ub.urepr(result['params'], nl=0, compact=1, nobr=1)) >>> kwplot.show_if_requested()

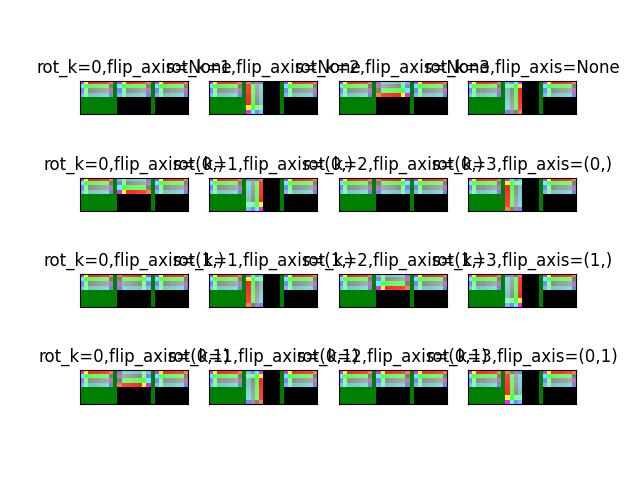

Example

>>> # Second similar test with a very small image to catch small errors >>> import kwimage >>> H, W = 4, 8 >>> canvas_dsize = (W, H) >>> box1 = kwimage.Boxes.random(1).scale((W, H)).quantize() >>> ltrb = box1.data >>> rot_k = 4 >>> annot = box1 >>> annot = box1.to_polygons()[0] >>> annot1 = annot.copy() >>> # The first 8 are the cannonically unique group elements >>> fliprot_params = [ >>> {'rot_k': 0, 'flip_axis': None}, >>> {'rot_k': 1, 'flip_axis': None}, >>> {'rot_k': 2, 'flip_axis': None}, >>> {'rot_k': 3, 'flip_axis': None}, >>> {'rot_k': 0, 'flip_axis': (0,)}, >>> {'rot_k': 1, 'flip_axis': (0,)}, >>> {'rot_k': 2, 'flip_axis': (0,)}, >>> {'rot_k': 3, 'flip_axis': (0,)}, >>> # The rest of these dont result in any different data, but we need to test them >>> {'rot_k': 0, 'flip_axis': (1,)}, >>> {'rot_k': 1, 'flip_axis': (1,)}, >>> {'rot_k': 2, 'flip_axis': (1,)}, >>> {'rot_k': 3, 'flip_axis': (1,)}, >>> {'rot_k': 0, 'flip_axis': (0, 1)}, >>> {'rot_k': 1, 'flip_axis': (0, 1)}, >>> {'rot_k': 2, 'flip_axis': (0, 1)}, >>> {'rot_k': 3, 'flip_axis': (0, 1)}, >>> ] >>> results = [] >>> for params in fliprot_params: >>> tf = kwimage.Affine.fliprot(canvas_dsize=canvas_dsize, **params) >>> annot2 = annot.warp(tf) >>> annot3 = annot2.warp(tf.inv()) >>> #annot3 = inv_fliprot_annot(annot2, canvas_dsize=canvas_dsize, **params) >>> results.append({ >>> 'annot2': annot2, >>> 'annot3': annot3, >>> 'params': params, >>> 'tf': tf, >>> 'canvas_dsize': canvas_dsize, >>> }) >>> box = kwimage.Box.coerce([0, 0, W, H], format='xywh') >>> print('box = {}'.format(ub.urepr(box, nl=1))) >>> for result in results: >>> params = result['params'] >>> warped = box.warp(result['tf']) >>> print('---') >>> print('params = {}'.format(ub.urepr(params, nl=1))) >>> print('warped = {}'.format(ub.urepr(warped, nl=1))) >>> print(ub.hzcat(['tf = ', ub.urepr(result['tf'], nl=1)]))

>>> # xdoctest: +REQUIRES(--show) >>> import kwplot >>> kwplot.autompl() >>> S = max(W, H) >>> image1 = np.linspace(.1, .9, W * H).reshape((H, W)) >>> image1 = kwimage.atleast_3channels(image1) >>> image1[0, :, 0] = 1 >>> image1[:, 0, 2] = 1 >>> image1[1, :, 1] = 1 >>> image1[:, 1, 1] = 1 >>> image1[3, :, 0] = 0.5 >>> image1[:, 7, 1] = 0.5 >>> pnum_ = kwplot.PlotNums(nCols=4, nSubplots=len(results)) >>> # NOTE: setting new_dsize='positive' illustrates an issuew with >>> # the pixel interpretation. >>> new_dsize = (S, S) >>> #new_dsize = 'positive' >>> for result in results: >>> image2 = kwimage.warp_affine(image1.copy(), result['tf'], dsize=new_dsize) >>> image3 = kwimage.warp_affine(image2.copy(), result['tf'].inv(), dsize=new_dsize) >>> annot2 = result['annot2'] >>> annot3 = result['annot3'] >>> #canvas1 = annot1.draw_on(image1.copy(), edgecolor='kitware_blue', fill=False) >>> #canvas2 = annot2.draw_on(image2.copy(), edgecolor='kitware_green', fill=False) >>> #canvas3 = annot3.draw_on(image3.copy(), edgecolor='kitware_red', fill=False) >>> canvas = kwimage.stack_images([image1, image2, image3], axis=1, pad=1, bg_value='green') >>> kwplot.imshow(canvas, pnum=pnum_(), title=ub.urepr(result['params'], nl=0, compact=1, nobr=1)) >>> kwplot.show_if_requested()

- class kwimage.Box(boxes, _check: bool = False)

Bases: NiceRepr

Represents a single Box.

This is a convenience class. For multiple boxes use kwimage.Boxes, which is more efficient.

Currently implemented by storing a Boxes object with one item and indexing into it. This implementation could be done more efficiently.

- SeeAlso:

Example

>>> from kwimage.structs.single_box import *  # NOQA
>>> box = Box.random()
>>> print(f'box={box}')
>>> #
>>> box.scale(10).quantize().to_slice()
>>> #
>>> sl = (slice(0, 10), slice(0, 30))
>>> box = Box.from_slice(sl)
>>> print(f'box={box}')

- property format¶

- property data¶

- classmethod from_slice(slice_, shape=None, clip=True, endpoint=True, wrap=False)[source]¶

Example

>>> import kwimage >>> slice_ = kwimage.Box.random().scale(10).quantize().to_slice() >>> new = kwimage.Box.from_slice(slice_)

- property dsize¶

- intersection(other)[source]¶

Example

>>> import kwimage
>>> self = kwimage.Box.coerce([0, 0, 10, 10], 'xywh')
>>> other = kwimage.Box.coerce([3, 3, 10, 10], 'xywh')
>>> print(str(self.intersection(other)))
<Box(ltrb, [3, 3, 10, 10])>

- union_hull(other)[source]¶

Example

>>> import kwimage >>> self = kwimage.Box.coerce([0, 0, 10, 10], 'xywh') >>> other = kwimage.Box.coerce([3, 3, 10, 10], 'xywh') >>> print(str(self.union_hull(other))) <Box(ltrb, [0, 0, 13, 13])>
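The intersection and union_hull results shown above can be reproduced by hand once both boxes are in ltrb form. The following is a minimal pure-Python sketch, not kwimage's implementation; the helper names are hypothetical, and returning None for non-overlapping boxes is an assumption of this sketch only.

```python
def xywh_to_ltrb(box):
    """Convert (x, y, width, height) to (left, top, right, bottom)."""
    x, y, w, h = box
    return [x, y, x + w, y + h]


def intersection_ltrb(a, b):
    """Overlap of two ltrb boxes, or None when they do not overlap (sketch convention)."""
    left, top = max(a[0], b[0]), max(a[1], b[1])
    right, bottom = min(a[2], b[2]), min(a[3], b[3])
    if right < left or bottom < top:
        return None
    return [left, top, right, bottom]


def union_hull_ltrb(a, b):
    """Axis-aligned bounds that enclose both ltrb boxes."""
    return [min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3])]


# Same inputs as the Box.intersection / Box.union_hull examples
box_a = xywh_to_ltrb([0, 0, 10, 10])
box_b = xywh_to_ltrb([3, 3, 10, 10])
isect = intersection_ltrb(box_a, box_b)
hull = union_hull_ltrb(box_a, box_b)
print(isect, hull)
```

The intersection takes the inner extremes (max of the left/top edges, min of the right/bottom edges), while the hull takes the outer extremes.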

- to_ltrb(*args, **kwargs)[source]¶

Example

>>> import kwimage >>> self = kwimage.Box.random().to_ltrb() >>> assert self.format == 'ltrb'

- to_xywh(*args, **kwargs)[source]¶

Example

>>> import kwimage >>> self = kwimage.Box.random().to_xywh() >>> assert self.format == 'xywh'

- to_cxywh(*args, **kwargs)[source]¶

Example

>>> import kwimage >>> self = kwimage.Box.random().to_cxywh() >>> assert self.format == 'cxywh'

- corners(*args, **kwargs)[source]¶

Example

>>> import kwimage >>> assert kwimage.Box.random().corners().shape == (4, 2)

- property aspect_ratio¶

Example:

>>> import kwimage >>> assert not ub.iterable(kwimage.Box.random().aspect_ratio)

- property center¶

Example:

>>> import kwimage >>> assert len(kwimage.Box.random().center) == 2

- property center_x¶

Example:

>>> import kwimage >>> assert not ub.iterable(kwimage.Box.random().center_x)

- property center_y¶

Example:

>>> import kwimage >>> assert not ub.iterable(kwimage.Box.random().center_y)

- property width¶

Example:

>>> import kwimage >>> assert not ub.iterable(kwimage.Box.random().width)

- property height¶

Example:

>>> import kwimage >>> assert not ub.iterable(kwimage.Box.random().height)

- property tl_x¶

Example:

>>> import kwimage >>> assert not ub.iterable(kwimage.Box.random().tl_x)

- property tl_y¶

Example:

>>> import kwimage >>> assert not ub.iterable(kwimage.Box.random().tl_y)

- property br_x¶

Example:

>>> import kwimage
>>> import ubelt as ub
>>> assert not ub.iterable(kwimage.Box.random().br_x)

- property br_y¶

Example:

>>> import kwimage >>> assert not ub.iterable(kwimage.Box.random().br_y)

- property dtype¶

- property area¶

- to_slice(endpoint=True)[source]¶

Example

>>> import kwimage >>> kwimage.Box.random(rng=0).scale(10).quantize().to_slice() (slice(5, 8, None), slice(5, 7, None))

- draw_on(image=None, color='blue', alpha=None, label=None, copy=False, thickness=2, label_loc='top_left')[source]¶

Draws a box directly on an image using OpenCV

Example

>>> import kwimage
>>> import numpy as np
>>> self = kwimage.Box.random(scale=256, rng=10, format='ltrb')
>>> canvas = np.zeros((256, 256, 3), dtype=np.uint8)
>>> image = self.draw_on(canvas)
>>> # xdoctest: +REQUIRES(--show)
>>> # xdoctest: +REQUIRES(module:kwplot)
>>> import kwplot
>>> kwplot.figure(fnum=2000, doclf=True)
>>> kwplot.autompl()
>>> kwplot.imshow(image)
>>> kwplot.show_if_requested()

- draw(color='blue', alpha=None, label=None, centers=False, fill=False, lw=2, ax=None, setlim=False, **kwargs)[source]¶

Draws the box on a matplotlib axes (the current axes when ax is not given).

Example

>>> # xdoctest: +REQUIRES(module:kwplot)
>>> import kwimage
>>> self = kwimage.Box.random(scale=512.0, rng=0, format='ltrb')
>>> self.translate((-128, -128), inplace=True)
>>> #image = (np.random.rand(256, 256) * 255).astype(np.uint8)
>>> # xdoctest: +REQUIRES(--show)
>>> import kwplot
>>> kwplot.autompl()
>>> fig = kwplot.figure(fnum=1, doclf=True)
>>> #kwplot.imshow(image)
>>> # xdoctest: +REQUIRES(--show)
>>> self.draw(color='blue', setlim=1.2)
>>> # xdoctest: +REQUIRES(--show)
>>> for o in fig.findobj():  # http://matplotlib.1069221.n5.nabble.com/How-to-turn-off-all-clipping-td1813.html
>>>     o.set_clip_on(False)
>>> kwplot.show_if_requested()

- class kwimage.Boxes(data, format=None, check=True)[source]¶

Bases: _BoxConversionMixins, _BoxPropertyMixins, _BoxTransformMixins, _BoxDrawMixins, NiceRepr

Converts boxes between different formats as long as the last dimension contains 4 coordinates and the format is specified.

This is a convenience class and should not store the data for very long. The general idiom is to create the class, convert the data, then get the raw data back and let the class be garbage collected. This helps ensure that your code is portable and understandable if this class is not available.

This class is meant to efficiently store and manipulate multiple boxes. In the case of a single box, the kwimage.structs.single_box.Box class can be used instead.

Example

>>> # xdoctest: +IGNORE_WHITESPACE
>>> import kwimage
>>> import numpy as np
>>> import ubelt as ub
>>> # Given an array / tensor that represents one or more boxes
>>> data = np.array([[ 0,  0, 10, 10],
>>>                  [ 5,  5, 50, 50],
>>>                  [20,  0, 30, 10]])
>>> # The kwimage.Boxes data structure is a thin fast wrapper
>>> # that provides methods for operating on the boxes.
>>> # It requires that the user explicitly provide a code that denotes
>>> # the format of the boxes (i.e. what each column represents)
>>> boxes = kwimage.Boxes(data, 'ltrb')
>>> # This means that there is no ambiguity about box format
>>> # The representation string of the Boxes object demonstrates this
>>> print('boxes = {!r}'.format(boxes))
boxes = <Boxes(ltrb,
    array([[ 0,  0, 10, 10],
           [ 5,  5, 50, 50],
           [20,  0, 30, 10]]))>
>>> # if you pass this data around. You can convert to other formats
>>> # For docs on available format codes see :class:`BoxFormat`.
>>> # In this example we will convert (left, top, right, bottom)
>>> # to (left-x, top-y, width, height).
>>> boxes.toformat('xywh')
<Boxes(xywh,
    array([[ 0,  0, 10, 10],
           [ 5,  5, 45, 45],
           [20,  0, 10, 10]]))>
>>> # In addition to format conversion there are other operations
>>> # We can quickly (using a C-backend) find IoUs
>>> ious = boxes.ious(boxes)
>>> print('{}'.format(ub.urepr(ious, nl=1, precision=2, with_dtype=False)))
np.array([[1.  , 0.01, 0.  ],
          [0.01, 1.  , 0.02],
          [0.  , 0.02, 1.  ]])
>>> # We can ask for the area of each box
>>> print('boxes.area = {}'.format(ub.urepr(boxes.area, nl=0, with_dtype=False)))
boxes.area = np.array([[ 100],[2025],[ 100]])
>>> # We can ask for the center of each box
>>> print('boxes.center = {}'.format(ub.urepr(boxes.center, nl=1, with_dtype=False)))
boxes.center = (
    np.array([[ 5. ],[27.5],[25. ]]),
    np.array([[ 5. ],[27.5],[ 5. ]]),
)
>>> # We can translate / scale the boxes
>>> boxes.translate((10, 10)).scale(100)
<Boxes(ltrb,
    array([[1000., 1000., 2000., 2000.],
           [1500., 1500., 6000., 6000.],
           [3000., 1000., 4000., 2000.]]))>
>>> # We can clip the bounding boxes
>>> boxes.translate((10, 10)).scale(100).clip(1200, 1200, 1700, 1800)
<Boxes(ltrb,
    array([[1200., 1200., 1700., 1800.],
           [1500., 1500., 1700., 1800.],
           [1700., 1200., 1700., 1800.]]))>
>>> # We can perform arbitrary warping of the boxes
>>> # (note that if the transform is not axis aligned, the axis aligned
>>> # bounding box of the transform result will be returned)
>>> transform = np.array([[-0.83907153,  0.54402111,  0. ],
>>>                       [-0.54402111, -0.83907153,  0. ],
>>>                       [ 0.        ,  0.        ,  1. ]])
>>> boxes.warp(transform)
<Boxes(ltrb,
    array([[ -8.3907153 , -13.8309264 ,   5.4402111 ,   0.        ],
           [-39.23347095, -69.154632  ,  23.00569785,  -6.9154632 ],
           [-25.1721459 , -24.7113486 , -11.3412195 , -10.8804222 ]]))>
>>> # Note, that we can transform the box to a Polygon for more
>>> # accurate warping.
>>> transform = np.array([[-0.83907153,  0.54402111,  0. ],
>>>                       [-0.54402111, -0.83907153,  0. ],
>>>                       [ 0.        ,  0.        ,  1. ]])
>>> warped_polys = boxes.to_polygons().warp(transform)
>>> print(ub.urepr(warped_polys.data, sv=1))
[
    <Polygon({
        'exterior': <Coords(data=
            array([[  0.       ,   0.       ],
                   [  5.4402111,  -8.3907153],
                   [ -2.9505042, -13.8309264],
                   [ -8.3907153,  -5.4402111],
                   [  0.       ,   0.       ]]))>,
        'interiors': [],
    })>,
    <Polygon({
        'exterior': <Coords(data=
            array([[ -1.4752521 ,  -6.9154632 ],
                   [ 23.00569785, -44.67368205],
                   [-14.752521  , -69.154632  ],
                   [-39.23347095, -31.39641315],
                   [ -1.4752521 ,  -6.9154632 ]]))>,
        'interiors': [],
    })>,
    <Polygon({
        'exterior': <Coords(data=
            array([[-16.7814306, -10.8804222],
                   [-11.3412195, -19.2711375],
                   [-19.7319348, -24.7113486],
                   [-25.1721459, -16.3206333],
                   [-16.7814306, -10.8804222]]))>,
        'interiors': [],
    })>,
]
>>> # The kwimage.Boxes data structure is also convertible to
>>> # several alternative data structures, like shapely, coco, and imgaug.
>>> print(ub.urepr(boxes.to_shapely(), sv=1))
[
    POLYGON ((0 0, 0 10, 10 10, 10 0, 0 0)),
    POLYGON ((5 5, 5 50, 50 50, 50 5, 5 5)),
    POLYGON ((20 0, 20 10, 30 10, 30 0, 20 0)),
]
>>> # xdoctest: +REQUIRES(module:imgaug)
>>> print(ub.urepr(boxes[0:1].to_imgaug(shape=(100, 100)), sv=1))
BoundingBoxesOnImage([BoundingBox(x1=0.0000, y1=0.0000, x2=10.0000, y2=10.0000, label=None)], shape=(100, 100))
>>> # xdoctest: -REQUIRES(module:imgaug)
>>> print(ub.urepr(list(boxes.to_coco()), sv=1))
[
    [0, 0, 10, 10],
    [5, 5, 45, 45],
    [20, 0, 10, 10],
]
>>> # Finally, when you are done with your boxes object, you can
>>> # unwrap the raw data by using the ``.data`` attribute
>>> # all operations are done on this data, which gives the
>>> # kwimage.Boxes data structure almost no overhead when
>>> # inserted into existing code.
>>> print('boxes.data =\n{}'.format(ub.urepr(boxes.data, nl=1)))
boxes.data =
np.array([[ 0,  0, 10, 10],
          [ 5,  5, 50, 50],
          [20,  0, 30, 10]], dtype=...)
>>> # xdoctest: +REQUIRES(module:torch)
>>> # This data structure was designed for use with both torch
>>> # and numpy, the underlying data can be either an array or tensor.
>>> boxes.tensor()
<Boxes(ltrb,
    tensor([[ 0,  0, 10, 10],
            [ 5,  5, 50, 50],
            [20,  0, 30, 10]]...))>
>>> boxes.numpy()
<Boxes(ltrb,
    array([[ 0,  0, 10, 10],
           [ 5,  5, 50, 50],
           [20,  0, 30, 10]]))>

Example

>>> # xdoctest: +IGNORE_WHITESPACE >>> from kwimage.structs.boxes import * # NOQA >>> # Demo of conversion methods >>> import kwimage >>> kwimage.Boxes([[25, 30, 15, 10]], 'xywh') <Boxes(xywh, array([[25, 30, 15, 10]]))> >>> kwimage.Boxes([[25, 30, 15, 10]], 'xywh').to_xywh() <Boxes(xywh, array([[25, 30, 15, 10]]))> >>> kwimage.Boxes([[25, 30, 15, 10]], 'xywh').to_cxywh() <Boxes(cxywh, array([[32.5, 35. , 15. , 10. ]]))> >>> kwimage.Boxes([[25, 30, 15, 10]], 'xywh').to_ltrb() <Boxes(ltrb, array([[25, 30, 40, 40]]))> >>> kwimage.Boxes([[25, 30, 15, 10]], 'xywh').scale(2).to_ltrb() <Boxes(ltrb, array([[50., 60., 80., 80.]]))> >>> # xdoctest: +REQUIRES(module:torch) >>> import torch >>> kwimage.Boxes(torch.FloatTensor([[25, 30, 15, 20]]), 'xywh').scale(.1).to_ltrb() <Boxes(ltrb, tensor([[ 2.5000, 3.0000, 4.0000, 5.0000]]))>
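The arithmetic behind the format codes in the conversion demo above can be sketched in a few lines of plain Python. This is an illustration of what the xywh, ltrb, and cxywh codes mean, not the library's implementation; the function names are hypothetical.

```python
def xywh_to_ltrb(box):
    """(left-x, top-y, width, height) -> (left, top, right, bottom)."""
    x, y, w, h = box
    return [x, y, x + w, y + h]


def ltrb_to_xywh(box):
    """(left, top, right, bottom) -> (left-x, top-y, width, height)."""
    left, top, right, bottom = box
    return [left, top, right - left, bottom - top]


def xywh_to_cxywh(box):
    """(left-x, top-y, width, height) -> (center-x, center-y, width, height)."""
    x, y, w, h = box
    return [x + w / 2, y + h / 2, w, h]


# Same box as the conversion demo
as_ltrb = xywh_to_ltrb([25, 30, 15, 10])
as_cxywh = xywh_to_cxywh([25, 30, 15, 10])
print(as_ltrb, as_cxywh)
```

Because every format is a fixed linear function of the others, a round trip such as ltrb_to_xywh(xywh_to_ltrb(box)) returns the original coordinates.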

Note

In the following examples we show cases where Boxes can hold a single 1-dimensional box array. This is a holdover from an older codebase, and some functions may assume that the input is at least 2-D. Thus, when representing a single bounding box, it is best practice to view it as a list of 1 box. While many functions will work in the 1-D case, not all functions have been tested, and thus we cannot guarantee correctness.

Example

>>> # xdoctest: +IGNORE_WHITESPACE >>> Boxes([25, 30, 15, 10], 'xywh') <Boxes(xywh, array([25, 30, 15, 10]))> >>> Boxes([25, 30, 15, 10], 'xywh').to_xywh() <Boxes(xywh, array([25, 30, 15, 10]))> >>> Boxes([25, 30, 15, 10], 'xywh').to_cxywh() <Boxes(cxywh, array([32.5, 35. , 15. , 10. ]))> >>> Boxes([25, 30, 15, 10], 'xywh').to_ltrb() <Boxes(ltrb, array([25, 30, 40, 40]))> >>> Boxes([25, 30, 15, 10], 'xywh').scale(2).to_ltrb() <Boxes(ltrb, array([50., 60., 80., 80.]))> >>> # xdoctest: +REQUIRES(module:torch) >>> import torch >>> Boxes(torch.FloatTensor([[25, 30, 15, 20]]), 'xywh').scale(.1).to_ltrb() <Boxes(ltrb, tensor([[ 2.5000, 3.0000, 4.0000, 5.0000]]))>

Example

>>> datas = [
>>>     [1, 2, 3, 4],
>>>     [[1, 2, 3, 4], [4, 5, 6, 7]],
>>>     [[[1, 2, 3, 4], [4, 5, 6, 7]]],
>>> ]
>>> formats = BoxFormat.cannonical
>>> for format1 in formats:
>>>     for data in datas:
>>>         self = box1 = Boxes(data, format1)
>>>         for format2 in formats:
>>>             box2 = box1.toformat(format2)
>>>             back = box2.toformat(format1)
>>>             assert box1 == back

- Parameters:

data (ndarray | Tensor | Boxes) – Either an ndarray or Tensor with trailing shape of 4, or an existing Boxes object.

format (str) – format code indicating which coordinates are represented by data. If data is a Boxes object then this is not necessary.

check (bool) – if True runs input checks on raw data.

- Raises:

ValueError – if data is specified without a format

- classmethod random(num=1, scale=1.0, format='xywh', anchors=None, anchor_std=0.16666666666666666, tensor=False, rng=None)[source]¶

Makes random boxes; typically for testing purposes

- Parameters:

num (int) – number of boxes to generate

scale (float | Tuple[float, float]) – size of imgdims

format (str) – format of boxes to be created (e.g. ltrb, xywh)

anchors (ndarray) – normalized widths / heights of anchor boxes to perturb and randomly place (must be in the range 0-1)

anchor_std (float) – magnitude of noise applied to anchor shapes

tensor (bool) – if True, returns boxes in tensor format

rng (None | int | RandomState) – initial random seed

- Returns:

random boxes

- Return type:

Example

>>> # xdoctest: +IGNORE_WHITESPACE >>> Boxes.random(3, rng=0, scale=100) <Boxes(xywh, array([[54, 54, 6, 17], [42, 64, 1, 25], [79, 38, 17, 14]]...))> >>> # xdoctest: +REQUIRES(module:torch) >>> Boxes.random(3, rng=0, scale=100).tensor() <Boxes(xywh, tensor([[ 54, 54, 6, 17], [ 42, 64, 1, 25], [ 79, 38, 17, 14]]...))> >>> anchors = np.array([[.5, .5], [.3, .3]]) >>> Boxes.random(3, rng=0, scale=100, anchors=anchors) <Boxes(xywh, array([[ 2, 13, 51, 51], [32, 51, 32, 36], [36, 28, 23, 26]]...))>

Example

>>> # Boxes position/shape within 0-1 space should be uniform. >>> # xdoctest: +REQUIRES(--show) >>> import kwplot >>> kwplot.autompl() >>> fig = kwplot.figure(fnum=1, doclf=True) >>> fig.gca().set_xlim(0, 128) >>> fig.gca().set_ylim(0, 128) >>> import kwimage >>> kwimage.Boxes.random(num=10).scale(128).draw()

- classmethod concatenate(boxes, axis=0)[source]¶

Concatenates multiple boxes together

- Parameters:

boxes (Sequence[Boxes]) – list of boxes to concatenate

axis (int) – axis to stack on. Defaults to 0.

- Returns:

stacked boxes

- Return type:

Example

>>> boxes = [Boxes.random(3) for _ in range(3)] >>> new = Boxes.concatenate(boxes) >>> assert len(new) == 9 >>> assert np.all(new.data[3:6] == boxes[1].data)

Example

>>> boxes = [Boxes.random(3) for _ in range(3)] >>> boxes[0].data = boxes[0].data[0] >>> boxes[1].data = boxes[0].data[0:0] >>> new = Boxes.concatenate(boxes) >>> assert len(new) == 4 >>> # xdoctest: +REQUIRES(module:torch) >>> new = Boxes.concatenate([b.tensor() for b in boxes]) >>> assert len(new) == 4

- compress(flags, axis=0, inplace=False)[source]¶

Filters boxes based on a boolean criterion

- Parameters:

flags (ArrayLike) – true for items to be kept. Extended type: ArrayLike[bool]

axis (int) – you usually want this to be 0

inplace (bool) – if True, modifies this object

- Returns:

the boxes corresponding to where flags were true

- Return type:

Example

>>> self = Boxes([[25, 30, 15, 10]], 'ltrb') >>> self.compress([True]) <Boxes(ltrb, array([[25, 30, 15, 10]]))> >>> self.compress([False]) <Boxes(ltrb, array([], shape=(0, 4), dtype=...))>

- take(idxs, axis=0, inplace=False)[source]¶

Takes a subset of items at specific indices

- Parameters:

idxs (ArrayLike) – Indexes of items to take. Extended type: ArrayLike[int].

axis (int) – you usually want this to be 0

inplace (bool) – if True, modifies this object

- Returns:

the boxes corresponding to the specified indices

- Return type:

Example

>>> self = Boxes([[25, 30, 15, 10]], 'ltrb') >>> self.take([0]) <Boxes(ltrb, array([[25, 30, 15, 10]]))> >>> self.take([]) <Boxes(ltrb, array([], shape=(0, 4), dtype=...))>
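The difference between compress (boolean flags, one per box) and take (integer positions, in any order) can be sketched on a plain list of rows. This is a hedged illustration of the selection semantics only; the helper functions below are hypothetical stand-ins, not kwimage calls.

```python
rows = [
    [0, 0, 10, 10],
    [5, 5, 50, 50],
    [20, 0, 30, 10],
]


def compress(rows, flags):
    """Keep the rows whose flag is true; order is preserved."""
    return [row for row, flag in zip(rows, flags) if flag]


def take(rows, idxs):
    """Pick rows by position; indices may reorder or repeat rows."""
    return [rows[i] for i in idxs]


kept = compress(rows, [True, False, True])
picked = take(rows, [2, 0])
print(kept)
print(picked)
```

An all-false mask or an empty index list yields an empty selection, which mirrors the shape=(0, 4) results in the compress and take examples.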

- is_tensor()[source]¶

Whether the backend data is a torch tensor.

- Returns:

True if the Boxes are torch tensors

- Return type:

- is_numpy()[source]¶

Whether the backend data is a numpy array.

- Returns:

True if the Boxes are numpy arrays

- Return type:

- property _impl¶

returns the kwarray.ArrayAPI implementation for the data

- Returns:

the array API for the box backend

- Return type:

Example

>>> assert Boxes.random().numpy()._impl.is_numpy >>> # xdoctest: +REQUIRES(module:torch) >>> assert Boxes.random().tensor()._impl.is_tensor

- property device¶

If the backend is torch returns the data device, otherwise None

- astype(dtype)[source]¶

Changes the type of the internal array used to represent the boxes

Note

this operation is not inplace

- Returns:

the boxes with the chosen type

- Return type:

Example

>>> # xdoctest: +IGNORE_WHITESPACE >>> # xdoctest: +REQUIRES(module:torch) >>> Boxes.random(3, 100, rng=0).tensor().astype('int16') <Boxes(xywh, tensor([[54, 54, 6, 17], [42, 64, 1, 25], [79, 38, 17, 14]], dtype=torch.int16))> >>> Boxes.random(3, 100, rng=0).numpy().astype('int16') <Boxes(xywh, array([[54, 54, 6, 17], [42, 64, 1, 25], [79, 38, 17, 14]], dtype=int16))> >>> Boxes.random(3, 100, rng=0).tensor().astype('float32') >>> Boxes.random(3, 100, rng=0).numpy().astype('float32')

- round(inplace=False)[source]¶

Rounds data coordinates to the nearest integer.

This operation is applied directly to the box coordinates, so its output will depend on the format the boxes are stored in.

- Parameters:

inplace (bool) – if True, modifies this object. Defaults to False.

- Returns:

the boxes with rounded coordinates

- Return type:

- SeeAlso:

Example

>>> import kwimage >>> self = kwimage.Boxes.random(3, rng=0).scale(10) >>> new = self.round() >>> print('self = {!r}'.format(self)) >>> print('new = {!r}'.format(new)) self = <Boxes(xywh, array([[5.48813522, 5.44883192, 0.53949833, 1.70306146], [4.23654795, 6.4589411 , 0.13932407, 2.45878875], [7.91725039, 3.83441508, 1.71937704, 1.45453393]]))> new = <Boxes(xywh, array([[5., 5., 1., 2.], [4., 6., 0., 2.], [8., 4., 2., 1.]]))>
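Because rounding is applied to the stored coordinates, the same geometric box can round to different results depending on its format. The sketch below illustrates this with plain Python lists; the helpers are hypothetical and the input box is chosen only to make the difference visible.

```python
def xywh_to_ltrb(box):
    x, y, w, h = box
    return [x, y, x + w, y + h]


def ltrb_to_xywh(box):
    left, top, right, bottom = box
    return [left, top, right - left, bottom - top]


def round_coords(box):
    """Round every stored coordinate to the nearest integer."""
    return [round(v) for v in box]


box_xywh = [0.4, 0.0, 0.4, 1.0]

# Rounding the xywh numbers directly collapses the width to zero
rounded_as_xywh = round_coords(box_xywh)

# Rounding the same box stored as ltrb keeps a one-pixel width
rounded_as_ltrb = ltrb_to_xywh(round_coords(xywh_to_ltrb(box_xywh)))

print(rounded_as_xywh, rounded_as_ltrb)
```

If the rounded extent matters, it may be worth converting to the format whose coordinates you care about before rounding.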

- quantize(inplace=False, dtype=<class 'numpy.int32'>)[source]¶

Converts the box to integer coordinates.

This operation takes the floor of the left side and the ceil of the right side. Thus the area of the box never decreases, but this will often increase the width / height of the box by a pixel.

- Parameters:

inplace (bool) – if True, modifies this object

dtype (type) – type to cast as

- Returns:

the boxes with quantized coordinates

- Return type:

- SeeAlso:

Boxes.round()

Boxes.resize() if you need to ensure the size does not change

Example

>>> import kwimage >>> self = kwimage.Boxes.random(3, rng=0).scale(10) >>> new = self.quantize() >>> print('self = {!r}'.format(self)) >>> print('new = {!r}'.format(new)) self = <Boxes(xywh, array([[5.48813522, 5.44883192, 0.53949833, 1.70306146], [4.23654795, 6.4589411 , 0.13932407, 2.45878875], [7.91725039, 3.83441508, 1.71937704, 1.45453393]]))> new = <Boxes(xywh, array([[5, 5, 2, 3], [4, 6, 1, 3], [7, 3, 3, 3]]...))>

Example

>>> import kwimage >>> # Be careful if it is important to preserve the width/height >>> self = kwimage.Boxes([[0, 0, 10, 10]], 'xywh') >>> aff = kwimage.Affine.coerce(offset=(0.5, 0.0)) >>> warped = self.warp(aff) >>> new = warped.quantize(dtype=int) >>> print('self = {!r}'.format(self)) >>> print('warped = {!r}'.format(warped)) >>> print('new = {!r}'.format(new)) self = <Boxes(xywh, array([[ 0, 0, 10, 10]]))> warped = <Boxes(xywh, array([[ 0.5, 0. , 10. , 10. ]]))> new = <Boxes(xywh, array([[ 0, 0, 11, 10]]))>

Example

>>> import kwimage >>> self = kwimage.Boxes.random(3, rng=0) >>> orig = self.copy() >>> self.quantize(inplace=True) >>> assert np.any(self.data != orig.data)
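The floor/ceil rule described for quantize can be checked by hand. The sketch below is a pure-Python illustration for a single xywh box (the function name is hypothetical, and this is not the library's code); it reproduces the numbers from the examples above.

```python
import math


def quantize_xywh(box):
    """Floor the top-left corner and ceil the bottom-right corner of an xywh box."""
    x, y, w, h = box
    left, top = math.floor(x), math.floor(y)
    right, bottom = math.ceil(x + w), math.ceil(y + h)
    return [left, top, right - left, bottom - top]


# A 10x10 box shifted by half a pixel grows to 11 pixels wide
shifted = quantize_xywh([0.5, 0.0, 10.0, 10.0])

# First row of the random example above
first = quantize_xywh([5.48813522, 5.44883192, 0.53949833, 1.70306146])

print(shifted, first)
```

The quantized box always covers the original one, which is why the width or height can grow by a pixel.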

- numpy()[source]¶

Converts tensors to numpy. Does not change memory if possible.

- Returns:

the boxes with a numpy backend

- Return type:

Example

>>> # xdoctest: +REQUIRES(module:torch) >>> self = Boxes.random(3).tensor() >>> newself = self.numpy() >>> self.data[0, 0] = 0 >>> assert newself.data[0, 0] == 0 >>> self.data[0, 0] = 1 >>> assert self.data[0, 0] == 1

- tensor(device=NoParam)[source]¶

Converts numpy to tensors. Does not change memory if possible.

- Parameters:

device (int | None | torch.device) – The torch device to put the backend tensors on

- Returns:

the boxes with a torch backend

- Return type:

Example

>>> # xdoctest: +REQUIRES(module:torch) >>> self = Boxes.random(3) >>> # xdoctest: +REQUIRES(module:torch) >>> newself = self.tensor() >>> self.data[0, 0] = 0 >>> assert newself.data[0, 0] == 0 >>> self.data[0, 0] = 1 >>> assert self.data[0, 0] == 1

- ious(other, bias=0, impl='auto', mode=None)[source]¶

Intersection over union.

Compute IOUs (intersection area over union area) between these boxes and another set of boxes. This is a symmetric measure of similarity between boxes.

Todo

- [ ] Add a pairwise flag to toggle between one-vs-one and all-vs-all computation, i.e. add an option for componentwise calculation.

- Parameters:

other (Boxes) – boxes to compare IoUs against

bias (int) – either 0 or 1, does TL=BR have area of 0 or 1? Defaults to 0.

impl (str) – code specifying the implementation used to compute the IoUs. Can be either torch, py, c, or auto. Efficiency and the exact result will vary by implementation, but they will always be close. Some implementations only accept certain data types (e.g. impl='c' only accepts float32 numpy arrays). See ~/code/kwimage/dev/bench_bbox.py for benchmark details. On my system the torch impl was fastest (when the data was on the GPU). Defaults to 'auto'.

mode (str) – deprecated, use impl

- Returns:

the ious

- Return type:

ndarray

- SeeAlso:

iooas - for a measure of coverage between boxes

Examples

>>> import kwimage >>> self = kwimage.Boxes(np.array([[ 0, 0, 10, 10], >>> [10, 0, 20, 10], >>> [20, 0, 30, 10]]), 'ltrb') >>> other = kwimage.Boxes(np.array([6, 2, 20, 10]), 'ltrb') >>> overlaps = self.ious(other, bias=1).round(2) >>> assert np.all(np.isclose(overlaps, [0.21, 0.63, 0.04])), repr(overlaps)

Examples

>>> import kwimage >>> boxes1 = kwimage.Boxes(np.array([[ 0, 0, 10, 10], >>> [10, 0, 20, 10], >>> [20, 0, 30, 10]]), 'ltrb') >>> other = kwimage.Boxes(np.array([[6, 2, 20, 10], >>> [100, 200, 300, 300]]), 'ltrb') >>> overlaps = boxes1.ious(other) >>> print('{}'.format(ub.urepr(overlaps, precision=2, nl=1))) np.array([[0.18, 0. ], [0.61, 0. ], [0. , 0. ]]...)

Examples

>>> # xdoctest: +IGNORE_WHITESPACE >>> Boxes(np.empty(0), 'xywh').ious(Boxes(np.empty(4), 'xywh')).shape (0,) >>> #Boxes(np.empty(4), 'xywh').ious(Boxes(np.empty(0), 'xywh')).shape >>> Boxes(np.empty((0, 4)), 'xywh').ious(Boxes(np.empty((0, 4)), 'xywh')).shape (0, 0) >>> Boxes(np.empty((1, 4)), 'xywh').ious(Boxes(np.empty((0, 4)), 'xywh')).shape (1, 0) >>> Boxes(np.empty((0, 4)), 'xywh').ious(Boxes(np.empty((1, 4)), 'xywh')).shape (0, 1)

Examples

>>> # xdoctest: +REQUIRES(module:torch)
>>> import torch
>>> formats = BoxFormat.cannonical
>>> istensors = [False, True]
>>> results = {}
>>> for format in formats:
>>>     for tensor in istensors:
>>>         boxes1 = Boxes.random(5, scale=10.0, rng=0, format=format, tensor=tensor)
>>>         boxes2 = Boxes.random(7, scale=10.0, rng=1, format=format, tensor=tensor)
>>>         ious = boxes1.ious(boxes2)
>>>         results[(format, tensor)] = ious
>>> results = {k: v.numpy() if torch.is_tensor(v) else v for k, v in results.items() }
>>> results = {k: v.tolist() for k, v in results.items()}
>>> print(ub.urepr(results, sk=True, precision=3, nl=2))
>>> from functools import partial
>>> assert ub.allsame(results.values(), partial(np.allclose, atol=1e-07))
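To make the role of the bias parameter concrete, here is a minimal pure-Python sketch of IoU for two ltrb boxes. It is an independent illustration rather than any of the torch, py, or c implementations mentioned above, and it assumes bias is simply added to each width and height, which is consistent with the values in the examples.

```python
def area(box, bias=0):
    """Area of an ltrb box; with bias=1 a box where TL == BR has area 1."""
    left, top, right, bottom = box
    return max(right - left + bias, 0) * max(bottom - top + bias, 0)


def isect_area(a, b, bias=0):
    """Area of the overlap between two ltrb boxes (0 if they are disjoint)."""
    w = min(a[2], b[2]) - max(a[0], b[0]) + bias
    h = min(a[3], b[3]) - max(a[1], b[1]) + bias
    return max(w, 0) * max(h, 0)


def iou(a, b, bias=0):
    """Intersection area divided by union area."""
    inter = isect_area(a, b, bias)
    union = area(a, bias) + area(b, bias) - inter
    return inter / union if union > 0 else 0.0


boxes = [[0, 0, 10, 10], [10, 0, 20, 10], [20, 0, 30, 10]]
other = [6, 2, 20, 10]

with_bias = [round(iou(box, other, bias=1), 2) for box in boxes]
without_bias = [round(iou(box, other, bias=0), 2) for box in boxes]
print(with_bias, without_bias)
```

With bias=1 the third box, which only touches the other box along an edge, receives a small nonzero overlap; with bias=0 that overlap is exactly zero.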

- iooas(other, bias=0)[source]¶

Intersection over other area.

This is an asymmetric measure of coverage: how much of the “other” boxes is covered by these boxes. It is the ratio of the area of intersection between each pair of boxes to the area of the “other” box.

- SeeAlso:

ious - for a measure of similarity between boxes

- Parameters:

other (Boxes) – boxes to compare IoOA against

bias (int) – either 0 or 1, does TL=BR have area of 0 or 1? Defaults to 0.

- Returns:

the iooas

- Return type:

ndarray

Examples

>>> self = Boxes(np.array([[ 0, 0, 10, 10], >>> [10, 0, 20, 10], >>> [20, 0, 30, 10]]), 'ltrb') >>> other = Boxes(np.array([[6, 2, 20, 10], [0, 0, 0, 3]]), 'xywh') >>> coverage = self.iooas(other, bias=0).round(2) >>> print('coverage = {!r}'.format(coverage))
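The asymmetry of this measure is easiest to see by swapping the arguments. The following pure-Python sketch uses ltrb boxes and hypothetical helper names; it is not the library's implementation, and it returns 0.0 for a zero-area "other" box purely as a convention of this sketch.

```python
def area(box):
    left, top, right, bottom = box
    return max(right - left, 0) * max(bottom - top, 0)


def isect_area(a, b):
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    return max(w, 0) * max(h, 0)


def iooa(box, other):
    """Fraction of `other` that is covered by `box`."""
    other_area = area(other)
    if other_area == 0:
        return 0.0  # sketch convention for degenerate boxes
    return isect_area(box, other) / other_area


boxes = [[0, 0, 10, 10], [10, 0, 20, 10], [20, 0, 30, 10]]
other = [6, 2, 20, 10]

coverage = [round(iooa(box, other), 2) for box in boxes]
swapped = round(iooa(other, boxes[0]), 2)
print(coverage, swapped)
```

Here the first box covers about 29% of the other box, while the other box covers 32% of the first, so the two directions generally differ.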

- isect_area(other, bias=0)[source]¶

Intersection part of intersection over union computation

- Parameters:

other (Boxes) – boxes to intersect with

bias (int) – either 0 or 1, does TL=BR have area of 0 or 1? Defaults to 0.

- Returns:

the intersection areas

- Return type:

ndarray

Examples

>>> # xdoctest: +IGNORE_WHITESPACE >>> self = Boxes.random(5, scale=10.0, rng=0, format='ltrb') >>> other = Boxes.random(3, scale=10.0, rng=1, format='ltrb') >>> isect = self.isect_area(other, bias=0) >>> ious_v1 = isect / ((self.area + other.area.T) - isect) >>> ious_v2 = self.ious(other, bias=0) >>> assert np.allclose(ious_v1, ious_v2)

- intersection(other)[source]¶

Componentwise intersection between two sets of Boxes

Intersections of axis-aligned boxes are always boxes, so the result can be returned as Boxes.

- Parameters:

other (Boxes) – boxes to intersect with this object. (must be of same length)

- Returns:

the component-wise intersection geometry

- Return type:

Examples

>>> # xdoctest: +IGNORE_WHITESPACE >>> from kwimage.structs.boxes import * # NOQA >>> self = Boxes.random(5, rng=0).scale(10.) >>> other = self.translate(1) >>> new = self.intersection(other) >>> new_area = np.nan_to_num(new.area).ravel() >>> alt_area = np.diag(self.isect_area(other)) >>> close = np.isclose(new_area, alt_area) >>> assert np.all(close)

- union_hull(other)[source]¶

Componentwise bounds of the union between two sets of Boxes

NOTE: convert to polygon to do a real union.

Note

This is not a real hull. A better name for this might be union_bounds because we are returning the axis-aligned bounds of the convex hull of the union.

- Parameters:

other (Boxes) – boxes to union with this object. (must be of same length)

- Returns:

bounding box of the unioned boxes

- Return type:

Examples

>>> # xdoctest: +IGNORE_WHITESPACE >>> from kwimage.structs.boxes import * # NOQA >>> self = Boxes.random(5, rng=0).scale(10.) >>> other = self.translate(1) >>> new = self.union_hull(other) >>> new_area = np.nan_to_num(new.area).ravel()

- bounding_box()[source]¶

Returns the box that bounds all of the contained boxes

- Returns:

a single box

- Return type:

Examples

>>> # xdoctest: +IGNORE_WHITESPACE >>> from kwimage.structs.boxes import * # NOQA >>> self = Boxes.random(5, rng=0).scale(10.) >>> other = self.translate(1) >>> new = self.union_hull(other) >>> new_area = np.nan_to_num(new.area).ravel()
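Since the example above exercises union_hull rather than bounding_box itself, the idea of a single box bounding all contained boxes can be sketched as follows. This is a pure-Python illustration for ltrb rows with a hypothetical function name, not the library's code.

```python
def bounding_box(boxes):
    """Smallest ltrb box that encloses every ltrb box in `boxes`."""
    lefts, tops, rights, bottoms = zip(*boxes)
    return [min(lefts), min(tops), max(rights), max(bottoms)]


boxes = [
    [0, 0, 10, 10],
    [5, 5, 50, 50],
    [20, 0, 30, 10],
]
bounds = bounding_box(boxes)
print(bounds)
```

This is the many-box analogue of union_hull: one reduction over all rows instead of a componentwise comparison between two sets.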

- contains(other)[source]¶

Determine if points are completely contained by these boxes

- Parameters:

other (kwimage.Points) – points to test for containment. TODO: support generic data types

- Returns:

flags - N x M boolean matrix indicating which box contains which points, where N is the number of boxes and M is the number of points.

- Return type:

ArrayLike

Examples

>>> import kwimage >>> self = kwimage.Boxes.random(10).scale(10).round() >>> other = kwimage.Points.random(10).scale(10).round() >>> flags = self.contains(other) >>> flags = self.contains(self.xy_center) >>> assert np.all(np.diag(flags))
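The shape of the returned flags matrix (one row per box, one column per point) can be illustrated with a small pure-Python sketch. The function below is hypothetical, and treating points on the box boundary as contained is an assumption of this sketch, not a statement about the library's boundary handling.

```python
def contains(boxes, points):
    """N x M nested list: flags[i][j] is True when ltrb box i contains point j."""
    return [
        [(left <= x <= right) and (top <= y <= bottom) for (x, y) in points]
        for (left, top, right, bottom) in boxes
    ]


boxes = [[0, 0, 10, 10], [20, 0, 30, 10]]
points = [(5, 5), (25, 5), (15, 5)]

flags = contains(boxes, points)
for row in flags:
    print(row)
```

The first point lies in the first box, the second in the second box, and the third falls in the gap between them, so its column is all False.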

- view(*shape)[source]¶

Passthrough method to view or reshape

- Parameters:

*shape (Tuple[int, …]) – new shape

- Returns:

data with a different view

- Return type:

Example

>>> # xdoctest: +REQUIRES(module:torch) >>> self = Boxes.random(6, scale=10.0, rng=0, format='xywh').tensor() >>> assert list(self.view(3, 2, 4).data.shape) == [3, 2, 4] >>> self = Boxes.random(6, scale=10.0, rng=0, format='ltrb').tensor() >>> assert list(self.view(3, 2, 4).data.shape) == [3, 2, 4]

- _ensure_nonnegative_extent(inplace=False)[source]¶

Experimental. If the box has a negative width / height make them positive and adjust the tlxy point.

Need a better name for this function.

- FIXME:

- [ ] Slice semantics (i.e. start/stop) of boxes break under rotations and reflections. This function needs to be thought out a bit more before becoming non-experimental.

- Returns:

Boxes

Example

>>> import kwimage >>> self = kwimage.Boxes(np.array([ >>> [20, 30, -10, -20], >>> [0, 0, 10, 20], >>> [0, 0, -10, 20], >>> [0, 0, 10, -20], >>> ]), 'xywh') >>> new = self._ensure_nonnegative_extent(inplace=0) >>> assert np.any(self.width < 0) >>> assert not np.any(new.width < 0) >>> assert np.any(self.height < 0) >>> assert not np.any(new.height < 0)

>>> import kwimage >>> self = kwimage.Boxes(np.array([ >>> [0, 3, 8, -4], >>> ]), 'xywh') >>> new = self._ensure_nonnegative_extent(inplace=0) >>> print('self = {}'.format(ub.urepr(self, nl=1))) >>> print('new = {}'.format(ub.urepr(new, nl=1))) >>> assert not np.any(self.width < 0) >>> assert not np.any(new.width < 0) >>> assert np.any(self.height < 0) >>> assert not np.any(new.height < 0)
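One plausible reading of "make them positive and adjust the tlxy point" is sketched below for a single xywh box: a negative width or height is flipped, and the top-left corner moves so the box covers the same region. This interpretation and the function name are assumptions, since the method is experimental and its exact behavior is not specified here.

```python
def ensure_nonnegative_extent(box):
    """Flip negative width/height of an xywh box while covering the same region."""
    x, y, w, h = box
    if w < 0:
        x, w = x + w, -w  # move the left edge to where the right edge was
    if h < 0:
        y, h = y + h, -h  # move the top edge to where the bottom edge was
    return [x, y, w, h]


fixed_both = ensure_nonnegative_extent([20, 30, -10, -20])
fixed_height = ensure_nonnegative_extent([0, 3, 8, -4])
unchanged = ensure_nonnegative_extent([0, 0, 10, 20])
print(fixed_both, fixed_height, unchanged)
```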

- class kwimage.Color(color, alpha=None, space=None, coerce=True)[source]¶

Bases: NiceRepr

Used for converting a single color between spaces and encodings. This should only be used when handling small numbers of colors (e.g. 1); don’t use this to represent an image.

- Parameters:

space (str) – colorspace of wrapped color. Assume RGB if not specified and it cannot be inferred

CommandLine

xdoctest -m ~/code/kwimage/kwimage/im_color.py Color

Example

>>> print(Color('g')) >>> print(Color('orangered')) >>> print(Color('#AAAAAA').as255()) >>> print(Color([0, 255, 0])) >>> print(Color([1, 1, 1.])) >>> print(Color([1, 1, 1])) >>> print(Color(Color([1, 1, 1])).as255()) >>> print(Color(Color([1., 0, 1, 0])).ashex()) >>> print(Color([1, 1, 1], alpha=255)) >>> print(Color([1, 1, 1], alpha=255, space='lab'))

- Parameters:

color (Color | Iterable[int | float] | str) – something coercible into a color

alpha (float | None) – if specified, adds an alpha value

space (str) – The colorspace to interpret this color as. Defaults to rgb.

coerce (bool) – The existing init is not lightweight. This is a design problem that will need to be fixed in future versions. Setting coerce=False will disable all magic and use the input color and space args directly. Alpha will be ignored.

- forimage(image, space='auto')[source]¶

Return a numeric value for this color that can be used in the given image.

Create a numeric color tuple that agrees with the format of the input image (i.e. float or int, with 3 or 4 channels).

- Parameters:

image (ndarray) – image to return color for

space (str) – colorspace of the input image. Defaults to ‘auto’, which will choose rgb or rgba

- Returns:

the color value

- Return type:

Tuple[Number, …]

Example

>>> import kwimage >>> img_f3 = np.zeros([8, 8, 3], dtype=np.float32) >>> img_u3 = np.zeros([8, 8, 3], dtype=np.uint8) >>> img_f4 = np.zeros([8, 8, 4], dtype=np.float32) >>> img_u4 = np.zeros([8, 8, 4], dtype=np.uint8) >>> kwimage.Color('red').forimage(img_f3) (1.0, 0.0, 0.0) >>> kwimage.Color('red').forimage(img_f4) (1.0, 0.0, 0.0, 1.0) >>> kwimage.Color('red').forimage(img_u3) (255, 0, 0) >>> kwimage.Color('red').forimage(img_u4) (255, 0, 0, 255) >>> kwimage.Color('red', alpha=0.5).forimage(img_f4) (1.0, 0.0, 0.0, 0.5) >>> kwimage.Color('red', alpha=0.5).forimage(img_u4) (255, 0, 0, 127) >>> kwimage.Color('red').forimage(np.uint8) (255, 0, 0)
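The outputs in the example above suggest a simple rule: float images receive values in the 0-1 range, integer images receive values in the 0-255 range, and a fourth channel carries alpha (fully opaque unless specified). The sketch below is a pure-Python approximation of that rule with a hypothetical function name; truncating rather than rounding when scaling to 255 is an assumption inferred from the (255, 0, 0, 127) result.

```python
def color_for_image(rgb01, channels=3, is_float=True, alpha=None):
    """Build a color tuple matching an image's channel count and value range."""
    values = [float(v) for v in rgb01]
    if channels == 4:
        # Assume fully opaque when no alpha is given
        values.append(1.0 if alpha is None else float(alpha))
    if is_float:
        return tuple(values)
    # Assumption: scale to 0-255 and truncate toward zero
    return tuple(int(v * 255) for v in values)


red = (1.0, 0.0, 0.0)
float3 = color_for_image(red, channels=3, is_float=True)
uint4 = color_for_image(red, channels=4, is_float=False)
uint4_half = color_for_image(red, channels=4, is_float=False, alpha=0.5)
print(float3, uint4, uint4_half)
```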

- ashex(space=None)[source]¶

Convert to hex values

- Parameters:

space (None | str) – if specified convert to this colorspace before returning

- Returns:

the hex representation

- Return type:

- as01(space=None)[source]¶

Convert to float values

- Parameters:

space (None | str) – if specified convert to this colorspace before returning

- Returns:

The float tuple of color values between 0 and 1

- Return type:

Tuple[float, float, float] | Tuple[float, float, float, float]

Note

This function is only guaranteed to return 0-1 values for rgb values. For HSV and LAB, the native spaces are used. This is not ideal, and we may create a new function that fixes this - at least conceptually - and deprecate this one in favor of it in the future.

For HSV, H is between 0 and 360. S, and V are in [0, 1]

- classmethod _is_base255(channels)[source]¶

There is one corner case where all pixels are 1 or less.

- classmethod named_colors()[source]¶

- Returns:

names of colors that Color accepts

- Return type:

List[str]

Example

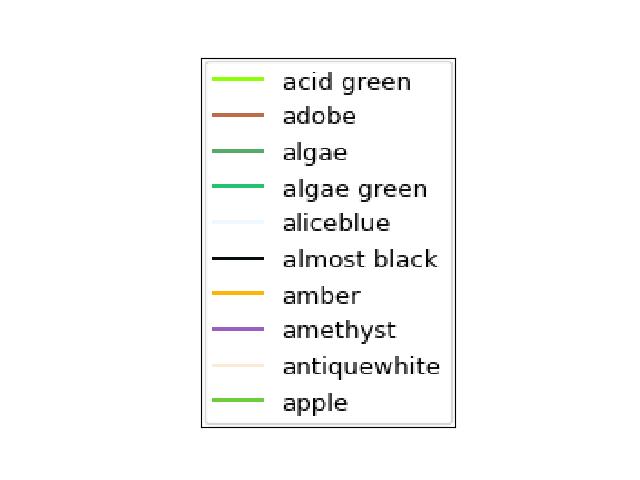

>>> import kwimage >>> named_colors = kwimage.Color.named_colors() >>> color_lut = {name: kwimage.Color(name).as01() for name in named_colors} >>> # xdoctest: +REQUIRES(module:kwplot) >>> # xdoctest: +REQUIRES(--show) >>> import kwplot >>> kwplot.autompl() >>> # This is a very big table if we let it be, reduce it >>> color_lut =dict(list(color_lut.items())[0:10]) >>> canvas = kwplot.make_legend_img(color_lut) >>> kwplot.imshow(canvas)

- classmethod distinct(num, existing=None, space='rgb', legacy='auto', exclude_black=True, exclude_white=True)[source]¶

Make multiple distinct colors.

The legacy variant is based on a Stack Overflow post [HowToDistinct], but the modern variant is based on the distinctipy package.

References

[HowToDistinct] https://stackoverflow.com/questions/470690/how-to-automatically-generate-n-distinct-colors

Todo

- [ ] If num is more than a threshold we should switch to a different strategy for generating colors that just samples uniformly from some colormap and then shuffles. We have no hope of making things distinguishable when num starts going over 10 or so. See [ColorLimits] [WikiDistinguish] [Disinct2] for more ideas.

- Returns:

list of distinct float color values

- Return type:

List[Tuple]

Example

>>> # xdoctest: +REQUIRES(module:matplotlib)
>>> from kwimage.im_color import *  # NOQA
>>> import kwimage
>>> colors1 = kwimage.Color.distinct(5, legacy=False)
>>> colors2 = kwimage.Color.distinct(3, existing=colors1)
>>> # xdoctest: +REQUIRES(module:kwplot)
>>> # xdoctest: +REQUIRES(--show)
>>> from kwimage.im_color import _draw_color_swatch
>>> swatch1 = _draw_color_swatch(colors1, cellshape=9)
>>> swatch2 = _draw_color_swatch(colors1 + colors2, cellshape=9)
>>> import kwplot
>>> kwplot.autompl()
>>> kwplot.imshow(swatch1, pnum=(1, 2, 1), fnum=1)
>>> kwplot.imshow(swatch2, pnum=(1, 2, 2), fnum=1)
>>> kwplot.show_if_requested()
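For intuition about what "distinct" colors means, one simple approach is to spread hues evenly around the HSV color wheel. The sketch below is illustrative only: the helper `evenly_spaced_hues` is hypothetical, and neither the legacy variant nor the distinctipy-based variant is claimed to work this way.

```python
import colorsys


def evenly_spaced_hues(num, saturation=0.9, value=0.9):
    """Illustrative only: spread `num` hues evenly around the HSV wheel."""
    # Each color is a float RGB tuple with channels in [0, 1].
    return [colorsys.hsv_to_rgb(i / num, saturation, value)
            for i in range(num)]


colors = evenly_spaced_hues(5)
print(len(colors))  # 5
```

Evenly spaced hues stop being easy to tell apart as `num` grows, which is the limitation the Todo item above describes.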

- distance(other, space='lab')[source]¶

Distance between self and another color

- Parameters:

other (Color) – the color to compare

space (str) – the colorspace to compare in

- Returns:

float
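As a sketch of the general idea, a color distance can be computed as the Euclidean distance between two color vectors expressed in the same space. The example below uses plain RGB tuples to avoid extra dependencies, whereas the method defaults to `space='lab'`. Both the helper name and the choice of a Euclidean metric are assumptions, not confirmed details of kwimage.

```python
import math


def euclidean_color_distance(color_a, color_b):
    """Hypothetical helper: straight-line distance between two color vectors."""
    # Both colors must be expressed in the same colorspace.
    return math.dist(color_a, color_b)


red = (1.0, 0.0, 0.0)
blue = (0.0, 0.0, 1.0)
print(euclidean_color_distance(red, red))  # 0.0
print(round(euclidean_color_distance(red, blue), 3))  # 1.414
```

Distances in a perceptual space such as LAB tend to track visual difference better than distances in RGB, which is a plausible reason for the `'lab'` default.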

- interpolate(other, alpha=0.5, ispace=None, ospace=None)[source]¶

Interpolate between colors

- Parameters:

other (Color) – A coercible Color

alpha (float | List[float]) – one or more interpolation values

ispace (str | None) – colorspace to interpolate in

ospace (str | None) – colorspace of returned color

- Returns:

Color | List[Color]

Example

>>> import kwimage
>>> import numpy as np
>>> import ubelt as ub
>>> color1 = self = kwimage.Color.coerce('orangered')
>>> color2 = other = kwimage.Color.coerce('dodgerblue')
>>> alpha = np.linspace(0, 1, 6)
>>> ispace = 'rgb'
>>> ospace = 'rgb'
>>> colorBs = self.interpolate(other, alpha, ispace=ispace, ospace=ospace)
>>> # xdoctest: +REQUIRES(module:kwplot)
>>> # xdoctest: +REQUIRES(--show)
>>> from kwimage.im_color import _draw_color_swatch
>>> swatch_colors = [color1] + colorBs + [color2]
>>> print('swatch_colors = {}'.format(ub.urepr(swatch_colors, nl=1)))
>>> swatch1 = _draw_color_swatch(swatch_colors, cellshape=(8, 8))
>>> import kwplot
>>> kwplot.autompl()
>>> kwplot.imshow(swatch1, pnum=(1, 1, 1), fnum=1)
>>> kwplot.show_if_requested()
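The core of color interpolation can be sketched as a per-channel linear blend. The helper `lerp_color` below is hypothetical; it assumes that `alpha=0` yields the first color and `alpha=1` yields the second, and it ignores the `ispace` / `ospace` conversions that the method performs.

```python
def lerp_color(color_a, color_b, alpha):
    """Hypothetical helper: alpha=0 gives color_a, alpha=1 gives color_b."""
    # Blend each channel independently.
    return tuple((1 - alpha) * a + alpha * b
                 for a, b in zip(color_a, color_b))


red = (1.0, 0.0, 0.0)
blue = (0.0, 0.0, 1.0)
print(lerp_color(red, blue, 0.5))  # (0.5, 0.0, 0.5)
```

Interpolating in a different colorspace generally gives different intermediate colors, which is why the method exposes `ispace`.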

- to_image(dsize=(8, 8))[source]¶

Create a solid-color image with this color

- Parameters:

dsize (Tuple[int, int]) – the desired width / height of the image (defaults to 8x8)

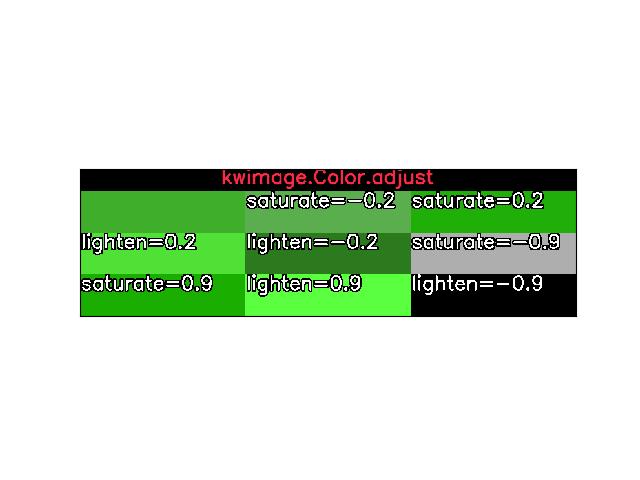

- adjust(saturate=0, lighten=0)[source]¶

Adjust the saturation or value of a color.

Requires that colormath is installed.

- Parameters:

saturate (float) – between +1 and -1, when positive saturates the color, when negative desaturates the color.

lighten (float) – between +1 and -1, when positive lightens the color, when negative darkens the color.

Example

>>> # xdoctest: +REQUIRES(module:colormath)
>>> import kwimage
>>> self = kwimage.Color.coerce('salmon')
>>> new = self.adjust(saturate=+0.2)
>>> cell1 = self.to_image()
>>> cell2 = new.to_image()
>>> # xdoctest: +REQUIRES(--show)
>>> import kwplot
>>> kwplot.autompl()
>>> canvas = kwimage.stack_images([cell1, cell2], axis=1)
>>> kwplot.imshow(canvas)

Example

>>> # xdoctest: +REQUIRES(module:colormath)
>>> import kwimage
>>> self = kwimage.Color.coerce('salmon', alpha=0.5)
>>> new = self.adjust(saturate=+0.2)
>>> cell1 = self.to_image()
>>> cell2 = new.to_image()
>>> # xdoctest: +REQUIRES(--show)
>>> import kwplot
>>> kwplot.autompl()
>>> canvas = kwimage.stack_images([cell1, cell2], axis=1)
>>> kwplot.imshow(canvas)

Example

>>> # xdoctest: +REQUIRES(module:colormath)
>>> import kwimage
>>> import ubelt as ub
>>> adjustments = [
>>>     {'saturate': -0.2},
>>>     {'saturate': +0.2},
>>>     {'lighten': +0.2},
>>>     {'lighten': -0.2},
>>>     {'saturate': -0.9},
>>>     {'saturate': +0.9},
>>>     {'lighten': +0.9},
>>>     {'lighten': -0.9},
>>> ]
>>> self = kwimage.Color.coerce('kitware_green')
>>> dsize = (256, 64)
>>> to_show = []
>>> to_show.append(self.to_image(dsize))
>>> for kwargs in adjustments:
>>>     new = self.adjust(**kwargs)
>>>     cell = new.to_image(dsize=dsize)
>>>     text = ub.urepr(kwargs, compact=1, nobr=1)
>>>     cell, info = kwimage.draw_text_on_image(cell, text, return_info=1, border={'thickness': 2}, color='white', fontScale=1.0)
>>>     to_show.append(cell)
>>> # xdoctest: +REQUIRES(--show)
>>> # xdoctest: +REQUIRES(module:kwplot)
>>> import kwplot
>>> kwplot.autompl()
>>> canvas = kwimage.stack_images_grid(to_show)
>>> canvas = kwimage.draw_header_text(canvas, 'kwimage.Color.adjust')
>>> kwplot.imshow(canvas)

- class kwimage.Coords(data=None, meta=None)[source]¶

A data structure to store n-dimensional coordinate geometry.

Currently it is up to the user to maintain what coordinate system this geometry belongs to.

Note

This class was designed to hold coordinates in r/c format, but in general this class is agnostic to dimension ordering as long as you are consistent. However, there are two places where this matters: (1) drawing and (2) gdal/imgaug-warping. In these places we will assume x/y for legacy reasons. This may change in the future.

The term axes with respect to Coords always refers to the final numpy axis. In other words, the final numpy axis represents ALL of the coordinate axes.

CommandLine

xdoctest -m kwimage.structs.coords Coords

Example

>>> from kwimage.structs.coords import *  # NOQA
>>> import kwarray
>>> rng = kwarray.ensure_rng(0)
>>> self = Coords.random(num=4, dim=3, rng=rng)
>>> print('self = {}'.format(self))
self = <Coords(data=
    array([[0.5488135 , 0.71518937, 0.60276338],
           [0.54488318, 0.4236548 , 0.64589411],
           [0.43758721, 0.891773  , 0.96366276],
           [0.38344152, 0.79172504, 0.52889492]]))>
>>> matrix = rng.rand(4, 4)
>>> self.warp(matrix)
<Coords(data=
    array([[0.71037426, 1.25229659, 1.39498435],
           [0.60799503, 1.26483447, 1.42073131],
           [0.72106004, 1.39057144, 1.38757508],
           [0.68384299, 1.23914654, 1.29258196]]))>
>>> self.translate(3, inplace=True)
<Coords(data=
    array([[3.5488135 , 3.71518937, 3.60276338],
           [3.54488318, 3.4236548 , 3.64589411],
           [3.43758721, 3.891773  , 3.96366276],
           [3.38344152, 3.79172504, 3.52889492]]))>
>>> self.translate(3, inplace=True)
<Coords(data=
    array([[6.5488135 , 6.71518937, 6.60276338],
           [6.54488318, 6.4236548 , 6.64589411],
           [6.43758721, 6.891773  , 6.96366276],
           [6.38344152, 6.79172504, 6.52889492]]))>
>>> self.scale(2)
<Coords(data=
    array([[13.09762701, 13.43037873, 13.20552675],
           [13.08976637, 12.8473096 , 13.29178823],
           [12.87517442, 13.783546  , 13.92732552],
           [12.76688304, 13.58345008, 13.05778984]]))>
>>> # xdoctest: +REQUIRES(module:torch)
>>> self.tensor()
>>> self.tensor().tensor().numpy().numpy()
>>> self.numpy()
>>> #self.draw_on()

- property dtype¶

- property dim¶

- property shape¶

- classmethod random(num=1, dim=2, rng=None, meta=None)[source]¶

Makes random coordinates; typically for testing purposes

- compress(flags, axis=0, inplace=False)[source]¶

Filters items based on a boolean criterion

- Parameters:

flags (ArrayLike) – true for items to be kept. Extended type: ArrayLike[bool].

axis (int) – you usually want this to be 0

inplace (bool) – if True, modifies this object

- Returns:

filtered coords

- Return type:

Coords

Example

>>> import kwimage
>>> self = kwimage.Coords.random(10, rng=0)
>>> self.compress([True] * len(self))
>>> self.compress([False] * len(self))
<Coords(data=array([], shape=(0, 2), dtype=float64))>
>>> # xdoctest: +REQUIRES(module:torch)
>>> self = self.tensor()
>>> self.compress([True] * len(self))
>>> self.compress([False] * len(self))
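The boolean filtering semantics can be shown without kwimage or numpy. The sketch below uses plain Python lists and `itertools.compress` as a stand-in for coordinate rows; it only illustrates the keep-where-true behaviour along axis 0 and is not how `Coords.compress` is implemented.

```python
from itertools import compress

# Each tuple stands in for one coordinate row.
rows = [(0.1, 0.2), (0.3, 0.4), (0.5, 0.6)]
flags = [True, False, True]

# Keep only the rows whose flag is True.
kept = list(compress(rows, flags))
print(kept)  # [(0.1, 0.2), (0.5, 0.6)]

# All-False flags give an empty result, as in the example above.
print(list(compress(rows, [False] * len(rows))))  # []
```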

- take(indices, axis=0, inplace=False)[source]¶

Takes a subset of items at specific indices

- Parameters:

indices (ArrayLike) – indexes of items to take. Extended type ArrayLike[int].

axis (int) – you usually want this to be 0

inplace (bool) – if True, modifies this object

- Returns:

filtered coords

- Return type:

Coords

Example

>>> import kwimage
>>> import numpy as np
>>> self = kwimage.Coords(np.array([[25, 30, 15, 10]]))
>>> self.take([0])
<Coords(data=array([[25, 30, 15, 10]]))>
>>> self.take([])
<Coords(data=array([], shape=(0, 4), dtype=...))>
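Index-based selection differs from boolean filtering in that the indices control both which rows are returned and in what order. A minimal pure-Python sketch, using the hypothetical helper `take_rows` on a list of tuples rather than a `Coords` object:

```python
def take_rows(rows, indices):
    """Hypothetical helper: select rows by position, in the order given."""
    return [rows[i] for i in indices]


rows = [(25, 30), (15, 10), (5, 40)]
print(take_rows(rows, [2, 0]))  # [(5, 40), (25, 30)]
print(take_rows(rows, []))      # []
```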

- astype(dtype, inplace=False)[source]¶

Changes the data type

- Parameters:

dtype – new type

inplace (bool) – if True, modifies this object

- Returns:

modified coordinates

- Return type:

Coords

- round(decimals=0, inplace=False)[source]¶

Rounds data to the specified decimal place

- Parameters:

inplace (bool) – if True, modifies this object

decimals (int) – number of decimal places to round to

- Returns:

modified coordinates

- Return type:

Coords

Example

>>> import kwimage
>>> self = kwimage.Coords.random(3).scale(10)
>>> self.round()

- view(*shape)[source]¶

Passthrough method to view or reshape

- Parameters:

*shape – new shape of the data

- Returns:

modified coordinates

- Return type:

Coords

Example

>>> from kwimage import Coords
>>> self = Coords.random(6, dim=4).numpy()
>>> assert list(self.view(3, 2, 4).data.shape) == [3, 2, 4]
>>> # xdoctest: +REQUIRES(module:torch)
>>> self = Coords.random(6, dim=4).tensor()
>>> assert list(self.view(3, 2, 4).data.shape) == [3, 2, 4]

- classmethod concatenate(coords, axis=0)[source]¶

Concatenates lists of coordinates together

- Parameters:

coords (Sequence[Coords]) – list of coords to concatenate

axis (int) – axis to stack on. Defaults to 0.

- Returns:

stacked coords

- Return type:

Coords

CommandLine

xdoctest -m kwimage.structs.coords Coords.concatenate

Example

>>> import numpy as np
>>> from kwimage import Coords
>>> coords = [Coords.random(3) for _ in range(3)]
>>> new = Coords.concatenate(coords)
>>> assert len(new) == 9
>>> assert np.all(new.data[3:6] == coords[1].data)

- property device¶

If the backend is torch returns the data device, otherwise None

- property _impl¶

Returns the internal tensor/numpy ArrayAPI implementation

- tensor(device=NoParam)[source]¶

Converts numpy to tensors. Does not change memory if possible.

- Returns:

modified coordinates

- Return type:

Coords

Example

>>> # xdoctest: +REQUIRES(module:torch)
>>> from kwimage import Coords
>>> self = Coords.random(3).numpy()
>>> newself = self.tensor()
>>> self.data[0, 0] = 0
>>> assert newself.data[0, 0] == 0
>>> self.data[0, 0] = 1
>>> assert self.data[0, 0] == 1

- numpy()[source]¶

Converts tensors to numpy. Does not change memory if possible.

- Returns:

modified coordinates

- Return type:

Coords

Example

>>> # xdoctest: +REQUIRES(module:torch)
>>> from kwimage import Coords
>>> self = Coords.random(3).tensor()
>>> newself = self.numpy()
>>> self.data[0, 0] = 0
>>> assert newself.data[0, 0] == 0
>>> self.data[0, 0] = 1
>>> assert self.data[0, 0] == 1

- reorder_axes(new_order, inplace=False)[source]¶

Change the ordering of the coordinate axes.

- Parameters:

new_order (Tuple[int]) – new_order[i] should specify which axis in the original coordinates should be mapped to the i-th position in the returned axes.

inplace (bool) – if True, modifies data inplace

- Returns:

modified coordinates

- Return type:

Coords

Note

This is the ordering of the “columns” in the final numpy axis, not the numpy axes themselves.

Example

>>> from kwimage.structs.coords import *  # NOQA
>>> self = Coords(data=np.array([
>>>     [7, 11],
>>>     [13, 17],
>>>     [21, 23],
>>> ]))
>>> new = self.reorder_axes((1, 0))
>>> print('new = {!r}'.format(new))
new = <Coords(data=
    array([[11,  7],
           [17, 13],
           [23, 21]]))>

Example

>>> from kwimage.structs.coords import *  # NOQA
>>> self = Coords.random(10, rng=0)
>>> new = self.reorder_axes((1, 0))
>>> # Remapping using 1, 0 reverses the axes
>>> assert np.all(new.data[:, 0] == self.data[:, 1])
>>> assert np.all(new.data[:, 1] == self.data[:, 0])
>>> # Remapping using 0, 1 does nothing
>>> eye = self.reorder_axes((0, 1))
>>> assert np.all(eye.data == self.data)
>>> # Remapping using 0, 0, destroys the 1-th column
>>> bad = self.reorder_axes((0, 0))
>>> assert np.all(bad.data[:, 0] == self.data[:, 0])
>>> assert np.all(bad.data[:, 1] == self.data[:, 0])
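The `new_order` semantics shown in the examples above amount to a column gather: position `i` of each output row is read from column `new_order[i]` of the input row. A dependency-free sketch with nested lists follows; the helper `reorder_columns` is hypothetical and only mirrors the behaviour the examples demonstrate.

```python
def reorder_columns(rows, new_order):
    """Hypothetical helper: output column i is input column new_order[i]."""
    return [[row[src] for src in new_order] for row in rows]


data = [[7, 11], [13, 17], [21, 23]]
print(reorder_columns(data, (1, 0)))  # [[11, 7], [17, 13], [23, 21]]
print(reorder_columns(data, (0, 0)))  # [[7, 7], [13, 13], [21, 21]]
```

Because this is a gather rather than a permutation check, repeating an index (as in `(0, 0)`) silently drops a column, matching the "destroys the 1-th column" case above.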

- warp(transform, input_dims=None, output_dims=None, inplace=False)[source]¶

Generalized coordinate transform.

- Parameters:

transform (SKImageGeometricTransform | ArrayLike | Augmenter | Callable) – scikit-image transform, a 3x3 transformation matrix, an imgaug Augmenter, or generic callable which transforms an NxD ndarray.